What Is—and Isn't—*Risk* and *Uncertainty*?

The newsletters in this Substack concern decisions under risk and uncertainty, the domain most relevant to the gambling metaphor described in the previous post. But what are risk and uncertainty? The answers might seem obvious since the terms are used in everyday language, but in behavioral decision theory each term has specialized usage. This essay distinguishes risk and uncertainty from each other and from some other types of decisions they are commonly contrasted against.


Decisions Under Certainty

Decisions under certainty are choices between various items or outcomes. Choosing between jams, yogurts, or wines in a market, between vacation packages at a travel agency, or between available car models at a dealership are all examples of decisions under certainty. Although such decisions are referred to as “certain,” that is an idealization; they are not really certain. We cannot be sure that a chosen item will actually be provided as promised. There is also uncertainty about how much utility a choice will have even if it is provided as promised. I might choose a company trip to Hawaii over a $1000 Christmas bonus, but in making that choice, I am guessing that the trip to Hawaii will have more subjective value to me than the $1000. With that in mind, “decisions under certainty” is more a label for what researchers are trying to study than a description of something inherent to the decisions themselves. Such research often considers how different kinds of attributes are traded off (speed versus gas mileage versus price when buying a car, for example), with the intention that study participants take the given attributes to be certain.

Decisions Under Risk

A narrow definition

In behavioral decision research, risky decisions usually refer to choices between options where at least one of the options has uncertain outcomes (likelihoods greater than 0% and less than 100%). For example: “With Option A, you get $100 for sure. With Option B (the risky option), you have a 50% chance of getting $0 and a 50% chance of getting $200. Which option would you prefer?” With risky decisions, likelihoods are known, or at least available.
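The two options in this example have the same expected value, which is what makes the choice a clean test of attitudes toward risk. Here is a minimal Python sketch of that arithmetic, representing each option as a list of (probability, payoff) pairs; that representation and the helper names are illustrative choices, not a standard from the decision literature.

```python
from fractions import Fraction

# Each option is a list of (probability, payoff) pairs (illustrative format).
option_a = [(Fraction(1), 100)]                           # $100 for sure
option_b = [(Fraction(1, 2), 0), (Fraction(1, 2), 200)]   # 50/50 gamble

def expected_value(option):
    """Probability-weighted average payoff of a described gamble."""
    assert sum(p for p, _ in option) == 1, "probabilities must sum to 1"
    return sum(p * payoff for p, payoff in option)

def is_risky(option):
    """Risky in the narrow sense: some outcome has 0 < p < 1."""
    return any(0 < p < 1 for p, _ in option)

print(expected_value(option_a), expected_value(option_b))  # 100 100
print(is_risky(option_a), is_risky(option_b))              # False True
```

Exact fractions are used so that equal expected values compare as exactly equal rather than approximately equal.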

That specialized usage of risk differs from everyday usage of the term in at least three ways. First, folk conceptions of risk rarely include known probabilities. Living near an active volcano might be considered risky even if those choosing to do so do not know the likelihood of a life- or home-threatening eruption. Second, risk tends not to be about trivial outcomes (magnitude matters). Third, risk implies potential costs and not just potential gains (valence matters). If someone were to say, “You could either win $1000 or lose a penny; are you willing to take that risk?” a reasonable response might be, “What’s the risk?” since the potential cost is trivial, despite the fact that the potential gain is significant. Scholars who study risk often adopt a broader definition that entails significant potential costs, aligning more closely with everyday usage. That said, in behavioral decision theory, the narrower conception of risk described above tends to prevail. The extensive amount of research focused on probabilistic outcomes has made it useful to differentiate between cases when probabilities are available (risky decisions) and cases when probabilities are unknown and the best one can do is to estimate them (uncertain decisions). Below are two examples of findings based on this narrow conception of risk.

Example 1: Overweighting small likelihoods and underweighting large likelihoods

Description

Daniel Kahneman was awarded the Nobel Prize in Economics in 2002, in large part for his work with Amos Tversky developing prospect theory, a psychological model that integrates given outcome probabilities with the magnitude (size) and valence (gains or losses) of outcomes.[1] One risk-relevant finding from prospect theory is that study participants have tended to overweight very small likelihoods (approaching but greater than 0%) and underweight large likelihoods (approaching but less than 100%). Shark attacks provide a good real-world example, where the mere possibility of an attack, however remote, is enough to keep many people out of the ocean.
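To make “overweighting” concrete, here is a small Python sketch of one probability weighting function. The original 1979 statement of prospect theory described the weighting qualitatively; the one-parameter form below comes from Tversky and Kahneman’s later cumulative prospect theory (1992), and gamma = 0.61 is their reported estimate for gains. Treat the parameter as illustrative, not as a property of any particular decision maker.

```python
def weight(p, gamma=0.61):
    """Inverse-S probability weighting, Tversky & Kahneman (1992) form.

    gamma < 1 overweights small p and underweights large p;
    gamma = 1 gives w(p) = p, i.e. no distortion.
    """
    num = p ** gamma
    return num / (num + (1 - p) ** gamma) ** (1 / gamma)

for p in (0.01, 0.10, 0.50, 0.90, 0.99):
    print(f"p = {p:.2f}  ->  w(p) = {weight(p):.3f}")
```

With gamma = 0.61, a 1% chance receives a decision weight of roughly 5%, while a 99% chance is treated as roughly 91%. This is the pattern described above for decisions from description.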

Criticism

See Hertwig et al.’s wonderful 2004 paper in Psychological Science, “Decisions from experience and the effect of rare events in risky choice,” for an important qualifier to the described finding. When people learn about outcome likelihoods from experience, rather than having those likelihoods stated (as was the case in the development of prospect theory), research participants tend to do the opposite: they behave as if they overweight large probabilities (less than 100%) and underweight small probabilities (greater than 0%). On the one hand, that may seem obvious if we imagine the fat turkey on Thanksgiving morning trying to guess how good the day will be, after having woken up every day to be overfed (to paraphrase Taleb’s famous example from The Black Swan). The turkey, of course, has no way to estimate the likelihood that the next day will differ from all the previous ones except from what it has experienced so far. But the inverse finding for decisions from experience is relevant even for outcomes that we know are theoretically possible, or theoretically not certain. Imagine a young doctor who learned about extremely rare diseases from textbooks during medical training. Those books will have mentioned the rarity of those exotic diseases. But the doctor, seeing patients with associated symptoms, may give such diseases nearly the same plausibility as more common ones, simply because both received similar emphasis and study time in the textbooks. Indeed, the rare diseases may get more emphasis, since the common ones will already be more familiar to doctors in training. That looks like a decision from description and matches the findings from prospect theory. A doctor nearing retirement, however, has seen thousands of patients and has long forgotten textbook training. Such a doctor may not give a rare disease that fits the symptoms even fleeting consideration, even after eliminating the more common diseases that fit the symptoms. That pattern runs directly contrary to the prospect-theory finding and suggests an important distinction in how we learn about likelihoods.
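One explanation discussed in this literature is simply sampling. Someone who experiences a gamble only a handful of times may never encounter its rare outcome at all, and so acts as if it does not exist. The Python sketch below computes that chance exactly and checks it by simulation. The 10% rare outcome and the 10 draws are hypothetical numbers chosen for illustration.

```python
import random

def p_never_seen(p_rare, n_draws):
    """Exact chance that n independent draws contain no rare outcome."""
    return (1 - p_rare) ** n_draws

def simulate_never_seen(p_rare, n_draws, n_people=100_000, seed=1):
    """Monte Carlo check: share of simulated samplers who never see it."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(n_people):
        if all(rng.random() >= p_rare for _ in range(n_draws)):
            misses += 1
    return misses / n_people

# Hypothetical gamble: a rare outcome with a 10% chance, sampled 10 times.
print(round(p_never_seen(0.10, 10), 3))  # 0.349
print(round(simulate_never_seen(0.10, 10), 2))
```

Roughly a third of people sampling this hypothetical gamble would never see the rare outcome, even though it is not especially rare.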

Example 2: Risk aversion in the face of gains, but risk seeking in the face of losses

Description

Another example from prospect theory is the now-qualified finding that people are risk seeking in the face of losses (preferring risky options over certain ones) but risk averse in the face of gains (preferring certain options over risky ones). The most famous demonstration of this phenomenon comes from the evidence for framing effects, in what has become known as the Asian Disease Problem. Framing effects occur when two versions of a problem are equivalent in meaningful content but differ in how that content is described or presented. In the Asian Disease Problem, two groups of participants are both told to “imagine that the U.S. is preparing for the outbreak of an unusual Asian disease, which is expected to kill 600 people.”

One group of participants was given a gain frame, in which outcomes are described as lives saved (rather than as deaths). That group was given the choice between two treatment options and asked which one they would prefer. Option 1: “200 people will be saved.” Option 2: “There is a 1/3 probability that 600 people will be saved and a 2/3 probability that no people will be saved.” If you do the math for Option 2, its expected value (the long-term average) equals the sure thing of Option 1: 1/3 × 600 = 200 lives saved on average. Among those participants, 72% chose Option 1, the certain choice to save 200 lives. Only 28% chose to gamble on either saving everyone or saving no one.

The second group of participants was given a loss frame. Their Option 1 was, “400 people will die” (instead of 200 people being saved). Their Option 2 was, “there is a 1/3 probability that nobody will die, and a 2/3 probability that 600 people will die” (instead of “a 1/3 probability that 600 people will be saved and a 2/3 probability that no people will be saved”). Again, the expected value of the gamble (Option 2) was the same as the certain outcome (Option 1): 2/3 × 600 = 400 people die on average. Among those participants, however, 78% preferred the risky Option 2 and only 22% preferred the certain outcome, Option 1.

These findings might not initially seem compelling. After all, 200 lives saved is not directly comparable to 400 people dying; arguably it is not even comparable to 200 people dying. What makes the study a compelling example of framing is that Option 1 and Option 2 turn out to be identical across the two groups, both in lives saved and people dying and in the likelihoods or certainties associated with each. Given that both groups are told that the Asian disease is expected to kill 600 people, “Saving 200 people” (Group 1’s Option 1) is mathematically identical to “400 people dying” (Group 2’s Option 1). In both cases, 200 people will be saved and 400 people will die out of the 600 people whose lives are at stake.

Similarly, “a 1/3 probability that 600 people will be saved and a 2/3 probability that no people will be saved” (Group 1’s Option 2) is mathematically identical to “a 1/3 probability that nobody will die and a 2/3 probability that 600 people will die” (Group 2’s Option 2). If there is a 1/3 probability that 600 people will be saved (Group 1’s scenario), then there is a 1/3 probability that no people will die (Group 2’s scenario), given that the stated total population was 600 people. And if there is “a 2/3 probability that no people will be saved” (the second half of Group 1’s Option 2), then there is “a 2/3 probability that 600 people will die” (the second half of Group 2’s Option 2). The only difference between the groups is framing, that is, describing the outcomes in terms of how many people live or how many people die. Otherwise, Option 1 and Option 2 are identical between groups. The two options also have the same expected value, except that one (Option 1) is certain and the other (Option 2) is risky. The participants, however, show vastly different preferences between groups: 72% of participants in the gain frame preferred the risk-free Option 1, while 78% of participants in the loss frame preferred the risky Option 2.
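The equivalence argument can be checked mechanically. The Python sketch below is an illustration, not part of the original study. It converts the loss-frame wording into lives saved using the stated total of 600, then compares both the gambles themselves and their expected values.

```python
from fractions import Fraction

TOTAL = 600  # people at risk, stated at the start of the problem

# Gain frame: (probability, lives saved).
gain_frame = {
    "Option 1": [(Fraction(1), 200)],                          # "200 saved"
    "Option 2": [(Fraction(1, 3), 600), (Fraction(2, 3), 0)],
}
# Loss frame as worded: (probability, deaths).
loss_frame_deaths = {
    "Option 1": [(Fraction(1), 400)],                          # "400 die"
    "Option 2": [(Fraction(1, 3), 0), (Fraction(2, 3), 600)],
}
# Re-express deaths as lives saved: saved = TOTAL - deaths.
loss_frame = {
    name: [(p, TOTAL - deaths) for p, deaths in outcomes]
    for name, outcomes in loss_frame_deaths.items()
}

def expected_saved(outcomes):
    """Expected number of lives saved."""
    return sum(p * saved for p, saved in outcomes)

for name in ("Option 1", "Option 2"):
    same = sorted(gain_frame[name]) == sorted(loss_frame[name])
    print(name, expected_saved(gain_frame[name]),
          expected_saved(loss_frame[name]), same)
# Option 1 200 200 True
# Option 2 200 200 True
```

Once the 600 total is integrated, the two frames describe exactly the same gambles. Leaving that total out is the step the criticisms below focus on.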

Criticism

This particular example has been extended in a variety of ways. These extensions suggest that the preference for risk in the face of losses, and for sure things in the face of gains, depends on the subjective importance of what is at stake. If the consequences are mere pennies lost or gained, participants (for whom pennies are presumably insignificant) tend to be risk seeking even in the face of gains (called the “peanuts effect”). At the other extreme, the description of lives lost can be highlighted to emphasize the sacredness of those lives, for example by showing images of individual lost lives. In that case, participants become risk seeking even in the face of gains. Presumably, losing even a single life is deemed unacceptable, and only a gamble offers a chance that no one is lost (see Tanner & Medin, 2004, “Protected values: No omission bias and no framing effects”). It seems there may be a sweet spot, between mattering too little and mattering too much, in which framing matters most, at least with respect to reversing preferences between certain and risky choices.

Separately, there have been several criticisms of the particular wording of the two scenarios in the Asian Disease Problem. The wording seems to introduce potential mediating variables that might largely explain the findings, beyond people being risk averse in the face of gains and risk seeking in the face of losses. In particular, (1) the total of 600 lives comes early in the setup, and it seems reasonable to imagine that some percentage of participants forgot, or never attended to, that value when evaluating the hypothetical options. And (2) without integrating that total population size, 200 people being saved and 400 people dying are not identical at all. Nor are the two versions of the gamble (Option 2), which may sound far worse for Group 1 than for Group 2 if one does not do the math with the total population. Consider an alternative version in which both scenarios involve matched certainties and matched probabilities: save or lose 300 lives for Option 1, versus a 50% chance of saving or losing either 600 lives or 0 lives for Option 2. Such a version would presumably produce a far less compelling result. That would fit the observation that Tversky and Kahneman’s version has an unusually large effect size (see, for example, Kühberger, 1998, “The Influence of Framing on Risky Decisions: A Meta-analysis”).

Risky decision making is uncommon in the wild

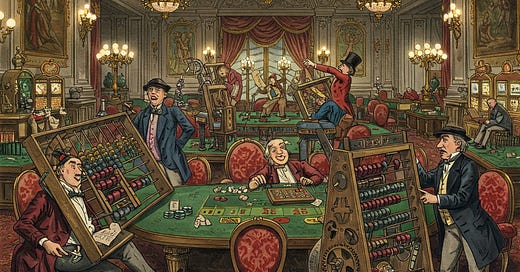

As suggested already, and despite how often risky decision making has been studied experimentally, most decisions “in the wild” do not entail stated or known probabilities. One notable exception is casino gambling. That is perhaps not surprising, since gambling is the metaphor on which models of decision making under risk and uncertainty have been based. Even in casino gambling, the probabilities are rarely as transparent as the gambling metaphor would suggest, but in many casino games outcome probabilities can be calculated exactly.

In the three casino games that make up the primary focus of this Substack—blackjack, roulette, and slot machines—this is true in distinct ways. In roulette, calculating outcome likelihoods is straightforward; a high-school knowledge of probability theory would be enough to assess outcome likelihoods of all roulette choices. In blackjack, the outcome likelihoods change as a function of the distribution of cards remaining to be dealt. Although they can theoretically be calculated, doing so requires computer analysis unavailable in the casino. Such calculations in real time in the casino are beyond the cognitive capacity of any known human. Analyses based on those likelihoods can nonetheless be used by researchers to evaluate how well casino blackjack players choose, assuming their goal is to maximize expected return.
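The contrast between roulette and blackjack can be sketched in a few lines of Python. The roulette figures follow from the standard payouts: a single number pays 35 to 1 and red or black pays even money, on a double-zero (38-pocket) or single-zero (37-pocket) wheel. The blackjack part only shows how the chance that the next card is ten-valued shifts with the cards already dealt, using a hypothetical set of dealt cards. It is not an estimate of a player’s expected return, which depends on complete hands and playing decisions.

```python
from fractions import Fraction

def roulette_ev(numbers_covered, payout, pockets):
    """Expected return per unit bet: win `payout` to 1, else lose the stake."""
    p_win = Fraction(numbers_covered, pockets)
    return p_win * payout - (1 - p_win)

# Double-zero (38 pockets) vs single-zero (37 pockets) wheels.
for pockets in (38, 37):
    straight = roulette_ev(1, 35, pockets)  # single number, pays 35 to 1
    red = roulette_ev(18, 1, pockets)       # red/black, pays even money
    print(pockets, straight, red, round(float(straight), 4))

def p_ten_next(tens_left, cards_left):
    """Chance the next card is ten-valued (10, J, Q, K), given unseen cards."""
    return Fraction(tens_left, cards_left)

# A six-deck shoe: 312 cards, 96 of them ten-valued.
print(p_ten_next(96, 312))           # 4/13 for a full shoe
# Hypothetical: 52 cards dealt, of which only 8 were ten-valued.
print(p_ten_next(96 - 8, 312 - 52))  # 22/65, richer in tens than a full shoe
```

On the double-zero wheel, both bets lose 1/19 of the stake on average, and on the single-zero wheel both lose 1/37. In blackjack, by contrast, the relevant probabilities move with every card dealt.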

In slot machines, the likelihoods are programmed into the software and (within legal limits that vary by jurisdiction) can be chosen by casino management. But those likelihoods are entirely inaccessible to the players themselves and to scholars. They can only be guessed at from previous outcomes, legal bounds, published win-loss percentages, and individual reports, which vary widely. Because the variability in slot machine outcomes is extremely high (with some progressive slot machines in Las Vegas offering jackpots in the millions of dollars), it is not possible to reliably estimate outcome likelihoods from experience. The challenge is made harder by the fact that slots are designed so that the surface structure of the game does not map onto the actual outcome likelihoods (see, for example, Turner & Horbay, 2004, “How do slot machines and other electronic gambling machines actually work?”). Near misses are programmed into the software. Stops just above and below jackpot symbols are therefore more likely than the physical structure of the reels would suggest, as are stops on the jackpot symbol on the first and second reels.
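The mismatch between surface structure and programmed likelihoods can be illustrated with a “virtual reel” sketch in Python. Each visible reel has a fixed number of physical stops, but the software draws from a larger table in which some stops appear more often than others. All the weights below are hypothetical, chosen only to reproduce the pattern described above. Real machines’ weighting tables are proprietary and vary, and the reels are assumed here to be independent.

```python
from fractions import Fraction

# HYPOTHETICAL weights for illustration only.
PHYSICAL_STOPS = 22   # stops a player can see on each physical reel
VIRTUAL_ENTRIES = 64  # entries the software actually draws from, per reel

# Virtual entries mapped to the jackpot stop on reels 1, 2, and 3.
jackpot_weights = [4, 4, 1]
# Combined virtual entries mapped to the two blanks just above and
# below the jackpot stop on reel 3 (the "near miss" positions).
reel3_adjacent_weight = 16

# What the physical reels suggest: every stop equally likely.
apparent_jackpot = Fraction(1, PHYSICAL_STOPS) ** 3
apparent_near_miss = Fraction(1, PHYSICAL_STOPS) ** 2 * Fraction(2, PHYSICAL_STOPS)

# What this hypothetical virtual mapping actually produces.
actual_jackpot = Fraction(1)
for w in jackpot_weights:
    actual_jackpot *= Fraction(w, VIRTUAL_ENTRIES)
actual_near_miss = (Fraction(jackpot_weights[0], VIRTUAL_ENTRIES)
                    * Fraction(jackpot_weights[1], VIRTUAL_ENTRIES)
                    * Fraction(reel3_adjacent_weight, VIRTUAL_ENTRIES))

print(f"jackpot:   looks like 1 in {1 / apparent_jackpot}, is 1 in {1 / actual_jackpot}")
print(f"near miss: looks like 1 in {1 / apparent_near_miss}, is 1 in {1 / actual_near_miss}")
```

With these made-up weights, the jackpot looks like a 1-in-10,648 event but is actually 1 in 16,384. The near miss (jackpot symbols on the first two reels, a blank just beside it on the third) looks like 1 in 5,324 but is actually 1 in 1,024. Nothing a player can see on the reels reveals this.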

Despite the challenges of integrating probabilities, particularly in blackjack and slot machines, consideration of outcome likelihoods is central to all three games. At the same time, despite the obvious importance of outcome probability, it is also less relevant than might be expected given the major role of probability in rational choice theory. Even in roulette, a game where probabilities can be perfectly calculated, strategies and beliefs that are only loosely connected to outcome likelihoods play an important role.

Decision Making Under Uncertainty

A narrow definition

“Risky decision making” is just one half of a pair of concepts that has been defined in an overly narrow way. The aim is to differentiate between (1) decisions where likelihoods are known or knowable (risky decisions) and (2) decisions where likelihoods are unknown and can only be subjectively estimated. “Uncertainty” has come to refer specifically to that second case. Of course, in everyday language and for many scholars, any non-definite outcome could be called “uncertain,” whether its probabilities are known or unknown. So could other aspects of a decision that are not specifically about outcome likelihoods, such as uncertainty about which choices are available or which outcomes might arise from each choice. In behavioral decision theory, however, this narrower meaning of uncertainty has proven useful for emphasizing the dichotomy between known and unknown outcome probabilities.

Example of uncertainty even in a domain with given outcome probabilities

As suggested above, even in putative decisions under certainty, uncertain likelihoods matter, not just given ones. Recall that even with putative certainty, decision makers do not really know that they will get what has been promised. Nor do they know how much they will like it if they get it: that trip to Hawaii might end in heartbreak. As with decisions under certainty and decisions under risk, then, the emphasis on uncertainty here may say more about what researchers are trying to study than about the decisions themselves, that is, whether outcomes are probabilistic and whether those probabilities are known. At some level, all decisions involve some degree of uncertainty. Consider the following personal example, which demonstrates the role of uncertainty about outcome likelihoods even when theoretical likelihoods have been exactly calculated.

A professional gambler friend had devised an optimal strategy for a blackjack-type game offered only in Czech casinos. I double-checked the calculation and then shared it with a mathematician and blackjack computer-modeling colleague, and we all agreed that the math was sound. It turned out that the game was beatable even without keeping track of cards removed from play (that is, even without counting cards). Several casinos in Prague offered the game, so one of the challenges was choosing where to play. The game differs from blackjack in some key ways. Most importantly, the variability in outcomes is far higher: even with a positive expected return, any single gambling session tended to end with much larger wins, or much larger losses, than a typical gambling session in blackjack. A theoretical calculation of expected return, taking into account outcome variability and the bankroll required, showed that this game had the potential to pay handsomely and be well worth the risk, given my own income at the time. On the other hand, several “uncertainties” made those calculated likelihoods less relevant, and made likelihoods that could only be subjectively estimated far more relevant. Below are a handful of those issues.
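Why higher variability matters even with a positive expected return can be sketched with a normal approximation, which is reasonable over many hands. The Python example below compares two hypothetical games with the same 1% edge per unit bet. One has a per-hand standard deviation of 1.15 units, roughly the figure commonly cited for standard blackjack. The other has 5.0 units, an arbitrary stand-in for a higher-variance game. Neither number describes the actual Czech game.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def session_summary(edge, sd_per_hand, hands):
    """Normal approximation to a session's net result, in units bet."""
    mean = edge * hands
    sd = sd_per_hand * math.sqrt(hands)
    p_losing = normal_cdf((0 - mean) / sd)
    # Hands needed before the expected win equals one standard deviation.
    hands_for_one_sd = (sd_per_hand / edge) ** 2
    return mean, sd, p_losing, hands_for_one_sd

# HYPOTHETICAL parameters: same 1% edge, different per-hand variability.
low_var = session_summary(edge=0.01, sd_per_hand=1.15, hands=500)
high_var = session_summary(edge=0.01, sd_per_hand=5.0, hands=500)

for label, (mean, sd, p_lose, n1) in (("low variance", low_var),
                                      ("high variance", high_var)):
    print(f"{label}: mean {mean:+.1f}, sd {sd:.1f}, "
          f"P(losing session) {p_lose:.2f}, hands for mean = 1 sd: {n1:,.0f}")
```

Both games have the same expected win per session. In the high-variance game, however, a session is closer to a coin flip, and it takes far more hands (250,000 versus about 13,000 here) before the edge reliably shows through the noise. That is why bankroll requirements weigh so heavily in such calculations.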

First, despite our best efforts, it remained possible that my friend had made a mistake in his calculations that neither of the two of us who carefully checked them had uncovered. I knew from my own research on gamblers’ strategies and beliefs that many gamblers develop sophisticated mathematical models that are compelling at first. Yet these models turn out to rest on mistaken assumptions with disastrous implications for expected return. Casinos excel at designing games that promote mistaken assumptions. Recall the example of slot machines, where the surface structure showing reel outcomes does not match the virtual structure programmed into a computer chip and invisible to players.

Second, there is human playing error. How many playing or betting mistakes might we make each hour, and how would they affect expected return? In blackjack, it is important to hide the fact that you are counting cards, since casinos that recognize a player as a card counter can easily make counting impossible. One strategy for hiding it is to occasionally make poor plays that no card counter would ever make. Examples include betting high off the top of a freshly shuffled stack of cards, or varying one’s bet before the odds could possibly have changed enough to justify it. Blackjack has been analyzed enough to support justified inferences about the impact of such deviations from the ideal strategy. This blackjack relative, however, has much higher variability in outcomes than blackjack, so individual errors could have a far bigger impact on expected return. On the other hand, we did not need to count cards at all to get an advantage, so human error was likely to be less common. We could only guess at the impact of such errors on expected return.

Third, there seemed to be a much higher likelihood of being cheated or robbed during or after gambling in Czech casinos than in Las Vegas. I had read enough about the evolution of casino gambling in the US to believe two things. Today’s large corporate-owned casinos have more to gain by keeping the games fair than by cheating players, but this was not always the case, especially when there were fewer customers and less regulation. Czech casinos are more like US casinos from a time long past. They have far fewer gamblers, so they are at higher risk of losing money if a couple of big players have big wins. They are also less regulated, partly as a result, which makes cheating easier. In Las Vegas casinos, for example, dealers are required to periodically change the blackjack cards and to display every single card to the players before a fresh shuffle. If a player is concerned about a marked or damaged card, they can bring it to the casino’s attention and get a fresh deck or have the cards checked. In some casinos in Prague, the cards were never displayed to players, and staff refused to show them when I raised serious concerns about cheating. Czech casinos also remain more closely associated with organized crime. During the time of my research, one casino owner was shot dead in a tunnel. In another case, a bomb was set off at a venue associated with organized crime and the casino industry. Employees themselves sometimes discussed organized crime and cheating openly. Dealers even intentionally cheated to help us win once we started tipping them. That would almost surely never happen in Las Vegas, where dealers are watched as closely as players to ensure fair play, and criminal prosecution is a real possibility. How should we evaluate the possibility that the casino is cheating in the game (such as by introducing extra cards that are unfavorable to the players)? Or the likelihood that someone might make a phone call after we leave the casino following a particularly successful night, to get that money back in a less above-board way?

We had no clear way to assess those outcome likelihoods (or the potential negative values associated with them), let alone to integrate them into the decision. Our best guesses were nonetheless central to three choices: which casinos to play this particular game in, how much money we could safely arrive or leave with, and whether to play the game at all. But in every case they were subjective estimates, and my best guess today, now that I have some distance, would differ from what it was then. Uncertainty prevails in the wild, even in the most mathematically well-defined games.
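To see how heavily such guesses can weigh against a carefully calculated edge, here is a Python sketch that blends the calculated expected return with subjectively weighted failure scenarios. The scenarios are a flawed calculation, playing errors, and a cheating casino. Every number in it is a hypothetical guess made up for illustration, and the function is not a method used in the episode described above. It also leaves out robbery risk and the non-monetary considerations, which are even harder to price.

```python
def subjective_edge(calculated_edge, p_math_wrong, edge_if_wrong,
                    error_cost, p_cheated, edge_if_cheated):
    """Blend a calculated edge with subjectively weighted failure scenarios.

    Edges are expected returns per unit bet; the p_* arguments are
    subjective probabilities. None of them can be looked up.
    """
    # Expected edge in an honest game, allowing for a flawed calculation.
    honest = (1 - p_math_wrong) * calculated_edge + p_math_wrong * edge_if_wrong
    # Subtract the guessed cost of our own playing and betting mistakes.
    honest -= error_cost
    # Mix in the chance that the casino cheats in this game.
    return (1 - p_cheated) * honest + p_cheated * edge_if_cheated

# HYPOTHETICAL guesses, for illustration only.
guesses = dict(calculated_edge=0.02, p_math_wrong=0.10, edge_if_wrong=-0.05,
               error_cost=0.005, p_cheated=0.15, edge_if_cheated=-0.20)

print(f"calculated edge: {guesses['calculated_edge']:+.4f}")
print(f"subjective edge: {subjective_edge(**guesses):+.4f}")
```

With these made-up numbers, a 2% calculated edge turns negative. With other, equally defensible guesses it would not, which illustrates how much the decision rests on estimates rather than on the calculation.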

Radical uncertainty

The narrow definition of uncertainty above focuses on whether outcome likelihoods are known or unknown. In the wild, however, much more than outcome likelihoods tends to be uncertain. In casino gambles, as in laboratory experiments, the range of possible choices tends to be well defined. But in most decisions in the wild, the range of choices is unknown, and arguably limited only by imagination and by available resources and technology. What are my choices in deciding how to write an email? Whatever the answer, it is different now, with the advent of LLMs, than it was a couple of years ago. Just as the choices are unknown, the possible outcomes of most choices are themselves unknown, even unknowable, and in some sense unlimited. The same is true of the values or utility associated with those outcomes. What will the utility of a trip to Hawaii be? That depends on whether I meet the love of my life or lose a limb to a shark. It also depends on any combination of the practically infinite number of other events that might occur during the trip. Going back to the blackjack-like casino game in the Czech Republic: where does the utility from the fun of trying to beat the casino at its own game come in? Or the danger of getting on the wrong side of a criminal gang? Or the utility (or disutility) associated with (not) having enough money to complete my dissertation research? Or the value of a good story about that game for future writing about that research? What role should the possibility that I will develop gambling problems play?

Gerd Gigerenzer has recently attempted to resolve this important ambiguity in the meaning of uncertainty (see “The rationality wars: A personal reflection,” 2024). He assigns the previous, narrower definition to the term “ambiguity.” He reserves the term “uncertainty” for the more radical sense common to decision making in the wild, where choices and outcomes are uncertain, as is the utility that might be associated with envisioned outcomes. It is not obvious to me that either term is better suited to either definition. So rather than argue about correct terminology in a domain already infused with ambiguous meaning, I would tend toward simply recognizing the ambiguity of the terminology itself and trying to be clear in context. That said, Gigerenzer’s distinction between “small worlds” and “large worlds” seems extremely important when considering decisions in the wild. Small worlds are well-constrained domains where choices, outcomes, and values are all well defined and transparent. Large worlds largely correspond to decisions in the wild, without such artificial constraints. Gigerenzer borrowed the small-world versus large-world distinction from Leonard Savage. Savage used it to point out that his own simplifying model of rational choice, Subjective Expected Utility Theory, only holds up in a small subset of narrowly defined decision contexts (small worlds). Gigerenzer points out that this observation calls into question the appropriateness of such normative models of rational choice for understanding and evaluating many, perhaps most, decisions.

My usage of risk and uncertainty throughout the Substack

I will tend to stick to the narrower senses of risk and uncertainty. Risk will refer to cases where outcome likelihoods are available, and uncertainty to cases where outcome likelihoods must be subjectively estimated. I do so for two main reasons, despite having just argued that a broader conception of uncertainty is important. (1) That is how the terms are commonly differentiated among behavioral decision scholars. (2) Unlike most decisions in the wild, gambling decisions tend to fit the “small world” assumptions common to experimental studies better. Choices, outcomes, and outcome values are well defined in most casino gambles. That said, the example of the Czech blackjack-like game above suggests a caveat. Once the casino context is given careful consideration, casino gambling choices end up involving the kind of radical uncertainty common to other large-world decisions more often than not. That point will come up often in future essays in this Substack, since it turns out to be important for both understanding and evaluating gamblers’ decision processes.

Game-Theoretical Decisions

Many important decisions are interactive, in the sense that the best decision for one person depends on choices made by others, which are themselves uncertain. Casino poker is a good example, since the quality of each player’s choice depends on uncertain assessments of the other players at the table. Game theory is not particularly relevant to blackjack, slot machines, or roulette, although there are a couple of exceptions. First, while other players’ choices do not affect outcomes in predictable ways, they do affect outcomes in unpredictable ways. If a player before me in blackjack decides to hit (take another card) or stand (stop taking additional cards), that will change the card I end up getting. And all of the blackjack players’ choices, including my own, will affect which cards the dealer eventually gets, which matters to the success of everyone at the table (see this summary of casino blackjack for context). Unless players are cheating, that influence will be random and unpredictable, but it is an influence nonetheless. Second, many players believe that this influence matters in ways that are not random and can be used to one’s advantage. As a result, many see how other people play as an important factor.
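The first exception can be checked with a little arithmetic. The Python sketch below assumes a hypothetical six-deck shoe at the start of a round. It asks, before any cards are seen, how likely my next card is to be ten-valued, depending on whether the player before me first takes a card that I cannot predict.

```python
from fractions import Fraction

def p_my_card_is_ten(tens, cards, other_player_hits):
    """Chance my next card is ten-valued, judged before any card is seen.

    If the player before me hits, they take one unpredictable card first.
    That changes WHICH card I get, but averaging over what they might
    draw leaves my probability exactly the same.
    """
    if not other_player_hits:
        return Fraction(tens, cards)
    p_they_take_ten = Fraction(tens, cards)
    return (p_they_take_ten * Fraction(tens - 1, cards - 1)
            + (1 - p_they_take_ten) * Fraction(tens, cards - 1))

# Six-deck shoe: 96 ten-valued cards among 312.
print(p_my_card_is_ten(96, 312, other_player_hits=False))  # 4/13
print(p_my_card_is_ten(96, 312, other_player_hits=True))   # 4/13
```

The other player’s choice changes the specific card I receive but not my odds. This is one way to see why their influence is random rather than something that can be exploited. Once their card is exposed, of course, the remaining composition has changed, just as in any card-counting calculation.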

Part of the value of sticking to games like blackjack, roulette, and slot machines is that they demonstrate how incredibly complicated even simpler decisions are. They do so with fewer degrees of freedom and potential confounding variables than game-theoretical games such as poker. With no disrespect to scholars who focus on game-theoretical problems, this narrower domain turns out to be wonderful for moving the discussion from decisions in the lab to decisions in the wild. At the same time, it still allows for meaningful commentary on the role of environmental design, culture and systems of meaning, and the wider conceptions of utility associated with such systems of meaning. As a result, game-theoretical decision making will rarely be considered in this Substack.

[1] Only Kahneman received the prize, since Tversky had already passed away, but there can be no doubt that Tversky would have received it as well (see, for example, this New York Times interview with Kahneman from November 5th, 2002).