AfterHours Tales: CoreWeave Inc (CRWV)

🧠 Inside CoreWeave: The Infrastructure Behind Today's Most Powerful AI Models

When it comes to companies powering the AI revolution, CoreWeave stands out as a critical infrastructure provider worth watching. Although the company is still private, there are compelling reasons why understanding this GPU cloud computing powerhouse now could give investors a significant advantage.

1. Positioning for the Upcoming IPO

CoreWeave has officially filed for an IPO expected in early 2025, with reports suggesting a potential valuation of $25-35 billion. This represents a remarkable trajectory for a company that began as a crypto-mining operation and transformed into one of the most important AI infrastructure providers. By understanding CoreWeave's business model, technology advantages, and market position now, investors can develop informed perspectives before the IPO roadshow begins and Wall Street analysts publish their initial coverage.

2. Understanding the Real AI Infrastructure Play

While many companies claim to be "AI-focused," CoreWeave represents something more fundamental: the critical infrastructure that makes advanced AI development possible. By exploring its specialized GPU cloud services, industry-leading deployment speed, and unique approach to data center design, investors can distinguish between the hype surrounding AI and the essential building blocks that enable the technology to advance. This knowledge helps identify which companies are providing genuine value in the AI ecosystem versus those merely riding the trend.

We've consistently positioned ourselves ahead of the curve in the AI infrastructure sector. In December 2024, we highlighted Nebius before it became widely discussed, demonstrating our commitment to identifying critical players in the AI ecosystem before they reach mainstream attention. We believe companies like CoreWeave will become increasingly important as AI development accelerates and demand for specialized computing resources grows exponentially.

3. Evaluating the Competitive Landscape in AI Infrastructure

Understanding CoreWeave provides investors with a valuable benchmark to evaluate other players in the rapidly evolving AI infrastructure space. As companies like Lambda, Crusoe Energy, and RunPod compete for market share, knowing CoreWeave's technological advantages, pricing models, and customer acquisition strategies offers crucial context for assessing competitive positioning.

This knowledge becomes particularly valuable when evaluating potential investments in both public and private companies operating in adjacent spaces. For instance, how does Microsoft's Azure AI infrastructure compare to CoreWeave's specialized offerings? What advantages might Google Cloud or AWS have or lack when competing for AI workloads? By using CoreWeave as a reference point, investors can make more informed decisions about which cloud and infrastructure providers are best positioned for the next phase of AI development.

As AI continues to transform industries across the economy, the companies providing the fundamental computing power, like CoreWeave, will likely remain critical to the technology's advancement, potentially offering significant investment opportunities as they scale to meet the seemingly insatiable demand for specialized computing resources.


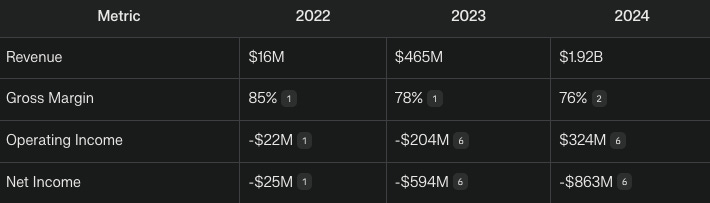

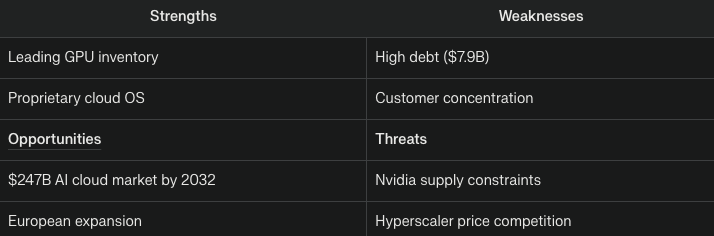

Thesis

CoreWeave has emerged as the essential infrastructure provider for the artificial intelligence revolution by creating a specialized cloud platform built specifically for GPU-intensive computing. Founded in 2017 by former energy traders who pivoted from cryptocurrency mining, CoreWeave has grown to become North America's largest private provider of Nvidia GPUs with 250,000 units. The company generated $1.92 billion in revenue in 2024 (growing an astonishing 737% year-over-year) and has secured $7 billion in future contracts. However, CoreWeave faces significant challenges, including $7.9 billion in debt and heavy reliance on Microsoft, which accounts for 62% of its revenue. As CoreWeave approaches a potential $35 billion IPO, its story represents both the tremendous opportunities and substantial risks in the rapidly expanding multibillion-dollar GPU cloud market.

To understand CoreWeave's importance, imagine what happened during historical gold rushes. The people who consistently made money weren't necessarily the miners themselves, but those who sold the picks, shovels, and supplies the miners needed. In today's AI gold rush, the "miners" are companies building AI applications, and CoreWeave provides the essential "picks and shovels" – the GPU computing power these companies desperately need.

When the generative AI boom began (with systems like ChatGPT), it created an enormous demand for specialized computer chips called GPUs (Graphics Processing Units), particularly Nvidia's powerful H100 and A100 models. These chips are essential for creating and running AI systems, but there simply weren't enough to go around. Most companies faced waiting periods of 18-24 months to get these chips.

CoreWeave had a significant head start. By 2024, they had already accumulated 45,000+ GPUs – representing about 15-20% of all AI-grade GPUs available in North America. They achieved this through smart timing: buying up equipment from struggling cryptocurrency mining operations and forming strategic partnerships with Nvidia before the AI boom hit full force.


Founding Story

In the dynamic world of tech startups, where familiar narratives often dominate, CoreWeave's journey stands as a beacon of inspiration. Breaking away from the conventional paths trodden by Stanford graduates and seasoned tech executives, this remarkable story begins not in Silicon Valley but in a humble New Jersey garage. Here, three energy traders—Michael Intrator, Brian Venturo, and Brannin McBee—embarked on an unexpected adventure that would redefine their futures.

Their tale began with a simple experiment: purchasing a GPU for cryptocurrency mining during Bitcoin's ascent in 2016. What started as a mere curiosity quickly transformed into discovery when they realized the potential of Ethereum mining to generate returns swiftly. This revelation came at a pivotal moment when their hedge fund faced challenges—a situation they embraced not as a defeat but as an opportunity.

With courage and vision, they closed Hudson Ridge Asset Management LLC in September 2017 and launched Atlantic Crypto from Venturo's grandfather’s garage. Their early days were marked by relentless dedication—constructing GPU racks and navigating volatile markets—all while driven by an unwavering belief in their venture.

Their timing was impeccable; the cryptocurrency boom fueled unprecedented demand for computing power. Without traditional venture capital backing, Atlantic Crypto scaled rapidly through reinvestment into hardware infrastructure. By early 2019, this foundation laid the groundwork for something greater than they had imagined.

February 2019 marked another turning point with a $1.2 million seed round that propelled them beyond crypto mining toward broader horizons like AI and machine learning applications—a strategic pivot that proved transformative.

Rebranding as CoreWeave in October 2021 signaled the company's evolution into a serious contender in cloud infrastructure. The AI revolution was beginning to demand vast GPU resources, a need closely aligned with the high-performance computing capacity the founders had spent years building.

CoreWeave's story is one of resilience against long odds. It shows how unconventional beginnings, fueled by passion and innovative thinking, can lead to extraordinary achievements even in an industry long dominated by giants like AWS and Google Cloud. That success owes much to the determination the founders showed at each phase of their journey.

Product Ecosystem

CoreWeave allows customers to lease powerful NVIDIA GPUs in the cloud rather than purchasing and maintaining their own hardware. This approach transforms what would traditionally require years of planning and millions in capital expenditure into an on-demand service accessible to companies of all sizes. With mega clusters containing over 100,000 GPUs, CoreWeave provides the computational power needed for today's most demanding AI applications.

Think of CoreWeave as providing the digital equivalent of an electrical grid for AI computing. Just as businesses don't build their own power plants but instead connect to the electrical grid, CoreWeave lets AI companies tap into massive GPU computing power without building their own infrastructure.

1. Compute Services

CoreWeave's primary offering is access to powerful graphics processing units (GPUs) in the cloud. While traditional computers use central processing units (CPUs) for general computing tasks, GPUs excel at performing many calculations simultaneously, making them ideal for AI workloads.

In simple terms: Think of a CPU as a few very smart workers who can handle complex tasks one at a time, while a GPU is like having thousands of simpler workers tackling parts of a problem all at once. For AI tasks that involve processing massive amounts of data in parallel, this makes GPUs dramatically faster.

CoreWeave offers various NVIDIA GPU models:

H100/H200 series: The most powerful GPUs available, used for training the largest AI models

A100 series: High-performance GPUs for demanding AI applications

L40/L40S and A40: Mid-range options for various AI and rendering tasks

RTX series: More affordable options for less intensive workloads

Real-world example: When Inflection AI needed to build their Pi chatbot (similar to ChatGPT), they used CoreWeave's H100 GPUs to train a 175 billion-parameter AI model in just 11 days—a task that would have taken months on traditional hardware.

2. Storage Services

AI workloads require not just computing power but also fast access to enormous amounts of data. CoreWeave offers three main types of storage:

Local Storage: Fast storage directly attached to the compute resources, like having a hard drive in your computer

Object Storage: Flexible storage for large amounts of unstructured data (like images, videos, or documents)

Distributed File Storage: Storage that spans multiple servers and locations for reliability and performance

Real-world example: When training an AI model to recognize objects in images, a company might store millions of training images in CoreWeave's object storage, then use distributed file storage to make these images quickly accessible to hundreds of GPUs working together on the training task.

3. Networking Services

For AI workloads that use multiple GPUs simultaneously, the speed at which these GPUs can communicate with each other is crucial. CoreWeave offers specialized networking options:

InfiniBand Networking: Ultra-fast connections (up to 400 gigabits per second) that allow thousands of GPUs to work together seamlessly

Virtual Private Cloud: Secure, isolated network environments for customer workloads

Direct Connect: Dedicated connections between CoreWeave's infrastructure and a customer's data centers

Real-world example: When rendering a complex visual effects scene for a movie, a studio might need hundreds of GPUs working together. InfiniBand networking allows these GPUs to share data almost instantly, reducing rendering time from weeks to hours.

4. Managed Services

CoreWeave handles the complex infrastructure management so customers can focus on their AI applications:

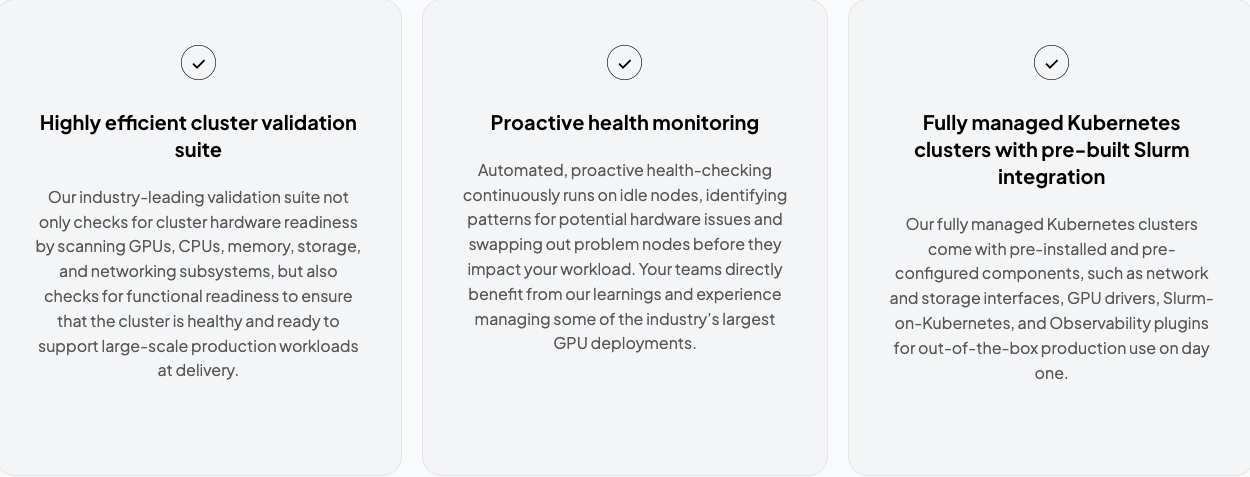

Managed Kubernetes: A system that automatically deploys, scales, and manages the containers that run customer applications

Slurm on Kubernetes: A specialized workload manager for high-performance computing tasks

Real-world example: Rather than hiring a team of infrastructure engineers to manage GPU clusters, an AI startup can use CoreWeave's managed services to automatically scale their computing resources up when training a new model and down when the training is complete.
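To make the managed Kubernetes idea a little more concrete, here is a minimal sketch of how a workload typically asks a Kubernetes cluster for GPUs: through the `nvidia.com/gpu` resource exposed by NVIDIA's device plugin. The pod name, container image, and training command below are hypothetical placeholders, and a real CoreWeave deployment may need additional fields that are not shown here.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Kubernetes Pod manifest (as a dict) that requests `gpus` GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,  # hypothetical image name
                    "command": ["python", "train.py"],  # hypothetical command
                    # GPUs are requested as an extended resource limit.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical job asking for 8 GPUs on a single node.
manifest = gpu_pod_manifest("demo-training-job", "example.com/trainer:latest", 8)
print(json.dumps(manifest, indent=2))
```

Kubernetes accepts manifests in JSON as well as YAML, so the printed output could in principle be passed to `kubectl apply -f -`. Scaling up or down then comes down to changing the GPU count or the number of pods.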

5. Platform Tools

These tools help customers monitor and manage their CoreWeave resources:

LifeCycle Controllers: Tools to manage the creation and deletion of computing resources

Tensorizer: A tool that serializes AI models and tensors so they can be loaded onto GPUs quickly

Observability Stack: Tools to monitor performance and troubleshoot issues


Technical Architecture

Kubernetes and Bare Metal

At the foundation of CoreWeave's architecture is Kubernetes—an open-source system for automating deployment and management of applications. CoreWeave runs Kubernetes directly on "bare metal" (physical servers) rather than using virtualization.

In simple terms: Traditional cloud providers add extra software layers between your application and the physical hardware, which can slow things down. CoreWeave's approach is like removing these middlemen, giving your AI applications direct access to the GPU's full power.

Real-world example: When running AI inference (using a trained model to generate responses), this direct access can reduce response time from seconds to milliseconds—the difference between a chatbot that feels sluggish and one that responds instantly.

KubeVirt for Virtual Servers

While bare metal provides maximum performance, CoreWeave uses KubeVirt to offer the flexibility of virtual servers when needed.

In simple terms: This gives customers the best of both worlds—the performance of direct hardware access with the convenience of virtual machines that can be quickly created, modified, or deleted.

Real-world example: A company might use a virtual server with 8 GPUs for daily AI model updates, but temporarily scale up to 100 GPUs for a major model redesign, then scale back down when finished—all without changing their application code.

Networking Architecture

CoreWeave's networking is designed specifically for the unique demands of AI workloads:

InfiniBand: A specialized networking technology that provides extremely low latency (delay) and high throughput (amount of data transferred)

HPC over Ethernet: A more standard networking approach that still delivers high performance

L2VPC: A networking option for connecting to external systems

In simple terms: Traditional networking is like a highway system with traffic lights and intersections that slow data down. InfiniBand is like a dedicated high-speed rail system where data travels directly between points at maximum speed.

Real-world example: When training large language models like GPT-4, thousands of GPUs need to constantly share updates. With standard networking, this communication becomes a bottleneck. CoreWeave's InfiniBand networking allows these GPUs to communicate so quickly that they can effectively work as one massive supercomputer.
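A rough calculation shows why link speed matters so much when GPUs share updates. The sketch below estimates the time for one ring all-reduce, a common way to synchronize gradients, using only bandwidth. It relies on the standard result that each GPU sends about 2 × (N − 1) / N times the payload. The 10 GB payload, the 1,024 GPUs, and the 25 gigabit comparison link are hypothetical, and the 400 gigabits per second figure is assumed to be the per-link rate. Latency, overlap with computation, and the collective algorithms actually used on CoreWeave are not modeled.

```python
def ring_allreduce_seconds(payload_gb: float, num_gpus: int, link_gbit_per_s: float) -> float:
    """Bandwidth-only time estimate for one ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N times the payload size.
    Latency and protocol overhead are ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    link_gbyte_per_s = link_gbit_per_s / 8  # 8 bits per byte
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gbyte_per_s


# Hypothetical scenario: 10 GB of gradients shared across 1,024 GPUs.
fast = ring_allreduce_seconds(10, 1024, 400)  # assumed 400 Gb/s link
slow = ring_allreduce_seconds(10, 1024, 25)   # hypothetical 25 Gb/s link
print(f"400 Gb/s link: {fast:.2f} s per synchronization")
print(f" 25 Gb/s link: {slow:.2f} s per synchronization")
```

Because training repeats this synchronization many thousands of times, a gap of a few seconds per step adds up to days of idle GPU time.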

Storage Architecture

CoreWeave's storage is distributed across multiple servers and data centers, providing both performance and reliability.

In simple terms: Rather than storing all data in one place (like a single hard drive that could fail), CoreWeave spreads data across many storage devices in different locations. If one fails, the data remains accessible from other locations.

Real-world example: A company training an AI model on petabytes of data (millions of gigabytes) needs both high-speed access to this data and assurance that a hardware failure won't interrupt training that might take weeks. CoreWeave's distributed storage provides both the performance and reliability needed for such mission-critical workloads.
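The idea of spreading copies of data across devices can be illustrated with a toy model. The sketch below places each data chunk on three different nodes and then checks which chunks remain readable after some nodes fail. The node names, the round-robin placement, and the choice of three copies are all hypothetical; this illustrates replication in general and is not a description of CoreWeave's actual storage system.

```python
def place_replicas(chunk_ids, nodes, copies=3):
    """Assign each chunk to `copies` distinct nodes in round-robin order."""
    if copies > len(nodes):
        raise ValueError("need at least as many nodes as copies")
    placement = {}
    for i, chunk in enumerate(chunk_ids):
        placement[chunk] = [nodes[(i + k) % len(nodes)] for k in range(copies)]
    return placement


def readable_chunks(placement, failed_nodes):
    """Return the chunks that still have at least one healthy replica."""
    failed = set(failed_nodes)
    return [
        chunk
        for chunk, holders in placement.items()
        if any(node not in failed for node in holders)
    ]


nodes = ["node-a", "node-b", "node-c", "node-d", "node-e"]  # hypothetical names
placement = place_replicas(range(10), nodes, copies=3)
survivors = readable_chunks(placement, failed_nodes=["node-b", "node-c"])
print(len(survivors), "of", len(placement), "chunks still readable")
```

With three copies per chunk, any two simultaneous node failures leave every chunk readable in this toy setup. Data only becomes unavailable when all three holders of the same chunk fail together.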

By combining these specialized technologies, CoreWeave has created an infrastructure specifically optimized for AI workloads—delivering performance that general-purpose cloud providers cannot match while making supercomputing-level resources accessible to companies of all sizes.

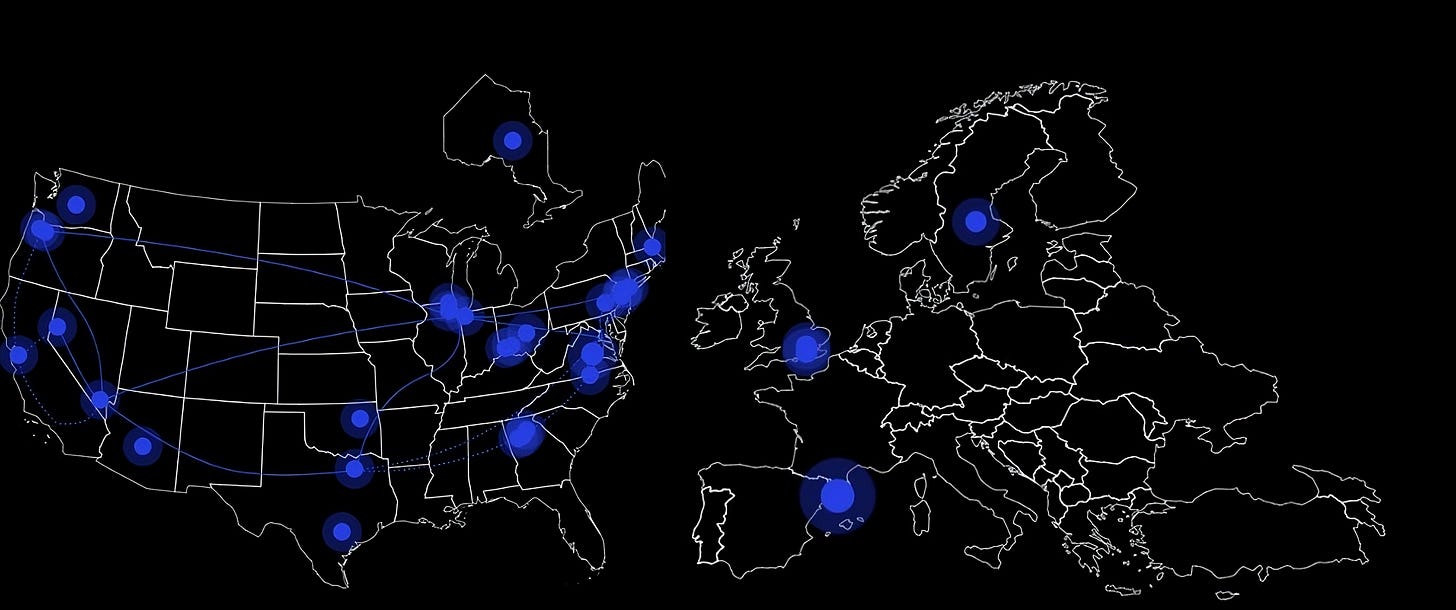

CoreWeave operates over 28 data centers across North America and Europe with hundreds of megawatts of capacity. The company offers both public and dedicated cloud deployments, with direct connections through its managed network backbone for secure, high-speed data access.

Market Dynamics

CoreWeave operates in the rapidly expanding GPU cloud computing market, valued at approximately $25.5 billion in 2024 and projected to grow at a compound annual growth rate (CAGR) of 34.8% through 2030. This explosive growth is driven by the generative AI revolution, which has created unprecedented demand for specialized computing infrastructure.
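To see what a 34.8% compound annual growth rate implies, the short sketch below projects the market size forward year by year. It assumes 2024 is the base year and that growth compounds once per year through 2030. Both are simplifying assumptions, since the source of the forecast and its exact methodology are not given here.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound `base_value` forward by `cagr` for `years` years."""
    return base_value * (1 + cagr) ** years


BASE_YEAR = 2024
BASE_SIZE_B = 25.5   # market size in $ billions, from the paragraph above
CAGR = 0.348         # 34.8% per year, from the paragraph above

for year in range(BASE_YEAR, 2031):
    size = project_market_size(BASE_SIZE_B, CAGR, year - BASE_YEAR)
    print(f"{year}: ${size:,.1f}B")
```

Under these assumptions the market would grow roughly sixfold over six years, to something on the order of $150 billion by 2030.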

The broader AI infrastructure market represents an even larger opportunity. As companies across industries integrate AI into their operations, global spending on AI-related infrastructure is expected to exceed $300 billion before the end of the decade. This includes not only cloud services but also the entire ecosystem of hardware, software, and services needed to support AI development and deployment.

What makes this market particularly attractive is its structural supply constraints. The production of advanced GPUs is limited by manufacturing capacity, with NVIDIA, the dominant supplier, unable to meet current demand. This supply-demand imbalance has created a significant competitive advantage for companies like CoreWeave, which secured large GPU inventories before the AI boom reached its peak.

Customer Base

CoreWeave's customer base can be segmented into four primary categories:

1. Hyperscalers (60-65% of Revenue)

Microsoft represents CoreWeave's largest customer, accounting for approximately 62% of the company's revenue. This relationship began when Microsoft needed to rapidly scale GPU capacity for its AI initiatives, including supporting OpenAI's ChatGPT and its own Copilot products. Unable to build out sufficient capacity quickly enough through its Azure cloud, Microsoft turned to CoreWeave as a specialized provider.

Other major technology companies also leverage CoreWeave's infrastructure to supplement their cloud offerings during this period of extreme GPU scarcity. This arrangement allows these companies to meet their customers' AI computing needs without the multi-year lead times required to build their own GPU data centers.

Example: When Microsoft needed to rapidly scale support for ChatGPT and Copilot, CoreWeave provided thousands of NVIDIA H100 GPUs within weeks rather than the 18+ months it would have taken Microsoft to build equivalent capacity.

2. AI Startups (20-25% of Revenue)

AI-focused startups represent CoreWeave's second-largest customer segment. These companies typically lack the capital and expertise to build their own GPU infrastructure but require massive computing resources to train and run their AI models.

Example: Inflection AI, creator of the Pi personal AI assistant, used CoreWeave's infrastructure to train its 175 billion parameter model. The training process required 3,500 H100 GPUs running continuously for 11 days—a computing task that would have been prohibitively expensive for a startup to support with owned hardware.

Similarly, Bit192 built a 20 billion parameter Japanese language model from scratch using CoreWeave's networking and GPU infrastructure, demonstrating how specialized AI companies can leverage CoreWeave to compete with much larger organizations.
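The scale of the Inflection AI example is easier to grasp in GPU-hours. Taking the figures above at face value (3,500 GPUs running for 11 days), the sketch below computes the total GPU-hours and what that would cost at a given hourly rate. The $2.50 per GPU-hour rate is a purely hypothetical placeholder, not a CoreWeave price.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours for `num_gpus` running continuously for `days` days."""
    return num_gpus * days * 24


def rental_cost(num_gpus: int, days: float, price_per_gpu_hour: float) -> float:
    """Rental cost in dollars at a flat hourly price per GPU."""
    return gpu_hours(num_gpus, days) * price_per_gpu_hour


HYPOTHETICAL_RATE = 2.50  # dollars per GPU-hour, illustrative only

hours = gpu_hours(3_500, 11)
cost = rental_cost(3_500, 11, HYPOTHETICAL_RATE)
print(f"{hours:,.0f} GPU-hours")
print(f"${cost:,.0f} at ${HYPOTHETICAL_RATE:.2f} per GPU-hour")
```

That works out to 924,000 GPU-hours. Renting them for a single run is a very different financial commitment from buying and housing 3,500 GPUs outright.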

3. Enterprise AI Adopters (10-15% of Revenue)

As traditional enterprises across industries—from financial services to healthcare to manufacturing—integrate AI into their operations, they face the challenge of accessing sufficient GPU computing power. Rather than making massive capital investments in rapidly depreciating hardware, many choose to leverage specialized cloud providers like CoreWeave.

Example: Financial institutions use CoreWeave's infrastructure to run risk models and fraud detection systems that leverage machine learning. These workloads require significant GPU computing power but may run intermittently, making cloud-based solutions more economical than owned infrastructure.

4. Visual Effects and Media (5-10% of Revenue)

CoreWeave's origins in GPU computing made it a natural fit for visual effects studios and media companies that require massive rendering capabilities. While this segment represents a smaller portion of current revenue, it provides diversification beyond pure AI workloads.

Example: Visual effects studios use CoreWeave's GPU clusters to render complex CGI sequences for films and television, reducing rendering time from weeks to hours compared to traditional CPU-based rendering farms.
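To put the four segments in dollar terms, the sketch below applies the percentage ranges above to the $1.92 billion in 2024 revenue cited in the Thesis. Treating the ranges as applying to that specific revenue figure is an assumption, so the outputs are rough orders of magnitude rather than reported numbers.

```python
TOTAL_REVENUE_B = 1.92  # 2024 revenue in $ billions, from the Thesis section

# (low share, high share) for each segment, from the headings above
SEGMENTS = {
    "Hyperscalers": (0.60, 0.65),
    "AI startups": (0.20, 0.25),
    "Enterprise AI adopters": (0.10, 0.15),
    "Visual effects and media": (0.05, 0.10),
}


def implied_revenue(total: float, share_range: tuple) -> tuple:
    """Return the (low, high) revenue implied by a share range."""
    low, high = share_range
    return total * low, total * high


for name, share in SEGMENTS.items():
    low, high = implied_revenue(TOTAL_REVENUE_B, share)
    print(f"{name}: ${low:.2f}B to ${high:.2f}B")

low_total = sum(low for low, _ in SEGMENTS.values())
high_total = sum(high for _, high in SEGMENTS.values())
print(f"Shares sum to between {low_total:.0%} and {high_total:.0%}")
```

The ranges bracket 100%, so they are internally consistent. The hyperscaler line alone comes to more than a billion dollars, which makes the concentration risk tangible.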

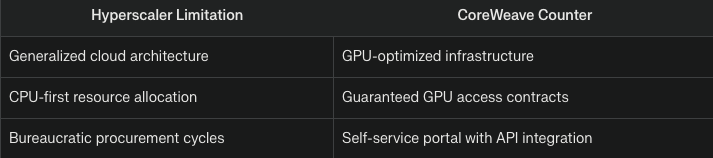

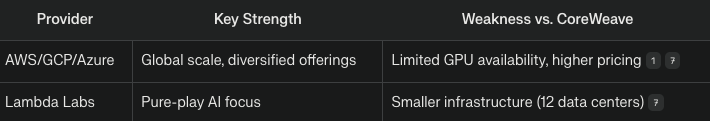

Competition

CoreWeave competes in the rapidly growing GPU cloud computing market, facing competition from both established cloud giants and specialized GPU infrastructure providers. The company's unique positioning and specialized focus give it distinct advantages while also presenting competitive challenges.

Traditional Cloud Providers

Amazon Web Services (AWS)

AWS dominates the general cloud computing market and offers GPU instances through services like EC2 P4d and P5 instances. However, AWS faces several limitations in the AI infrastructure space:

Limited GPU Availability: AWS frequently caps GPU rentals at 90 days and implements strict quotas on high-end GPUs

Higher Costs: AWS GPU instances typically cost 30-50% more than CoreWeave's equivalent offerings

Virtualization Overhead: AWS's multi-tenant architecture introduces performance penalties compared to CoreWeave's bare metal approach

General-Purpose Design: AWS infrastructure is designed for diverse workloads rather than optimized specifically for GPU computing

For example, when Anthropic was scaling its Claude AI assistant, AWS could not provide sufficient GPU capacity on short notice, forcing the company to seek alternative providers for expansion.

Google Cloud Platform (GCP)

Google Cloud offers A3 instances with NVIDIA H100 GPUs and has made significant investments in AI infrastructure. Google's competitive position includes:

TPU Technology: Google's custom Tensor Processing Units provide an alternative to NVIDIA GPUs for some workloads

Vertical Integration: Google develops both AI models and infrastructure, creating synergies

Supply Constraints: Despite its resources, Google faces the same industry-wide GPU supply limitations

However, Google's infrastructure is primarily optimized for its own AI needs rather than external customers, limiting its flexibility for specialized AI workloads.

Microsoft Azure

Microsoft represents both a major competitor and CoreWeave's largest customer. This complex relationship includes:

Azure AI Infrastructure: Azure offers NVIDIA H100 and A100 instances directly to customers

Supply Gap: Unable to meet demand through its own infrastructure, Microsoft leverages CoreWeave to supplement capacity

Strategic Investment: Microsoft has invested in CoreWeave, creating a partnership rather than pure competition

This relationship highlights the severe GPU supply constraints even hyperscalers face, with Microsoft needing to partner with CoreWeave despite competing in the same market.

Specialized GPU Cloud Providers

Lambda

Lambda focuses on GPU cloud services for AI researchers and smaller companies.

Compared to CoreWeave:

Scale Limitation: Lambda operates at a significantly smaller scale (thousands vs. CoreWeave's 250,000 GPUs)

Research Focus: Lambda targets academic and research workloads rather than enterprise-scale AI deployments

Integrated Hardware: Lambda also sells physical GPU servers, creating a different business model

While Lambda serves the AI community effectively, it lacks the scale to support the largest AI training and inference workloads that CoreWeave handles.

Paperspace (Acquired by DigitalOcean)

Paperspace provides GPU cloud services with a focus on accessibility and ease of use:

Developer Experience: Paperspace offers more user-friendly interfaces than CoreWeave's Kubernetes-based approach

Limited Scale: Like Lambda, Paperspace cannot support the largest AI workloads

Acquisition Impact: DigitalOcean's acquisition may shift Paperspace's strategic direction

Paperspace competes more directly with CoreWeave for smaller AI workloads and individual developers rather than enterprise-scale deployments.

Crusoe

Crusoe repurposes stranded energy from oil and gas operations to power GPU computing:

Energy Innovation: Crusoe's approach provides cost advantages through lower energy expenses

Environmental Positioning: The company appeals to environmentally conscious customers

Emerging Competitor: Still scaling its operations and GPU fleet

Crusoe represents an emerging competitive threat with a unique energy-focused approach to GPU cloud services.

Business Model

CoreWeave's business model is built on the product ecosystem described earlier in this article. The company operates a specialized cloud platform offering GPU-accelerated compute resources for AI training, inference, and high-performance workloads.

Key aspects:

Pay-as-you-go pricing: Customers rent GPU clusters (e.g., Nvidia H100, A100) by the hour, with prices tied to market demand and hardware performance.

Revenue streams: 98% from computing rentals, supplemented by storage and networking services.

CoreWeave employs a value-based pricing strategy that typically offers 30-50% cost savings compared to equivalent GPU instances from major cloud providers while maintaining impressive 76% gross margins. This pricing advantage stems from:

Infrastructure Optimization: Purpose-built data centers designed specifically for GPU workloads

Higher Utilization: Achieving 92% GPU utilization versus the industry average of 65%

Strategic GPU Acquisition: Securing large GPU inventories before the AI boom drove prices higher
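The utilization point lends itself to a quick back-of-the-envelope check. A GPU costs its owner money whether or not it is busy, so the cost of each hour actually used is the cost per available hour divided by utilization. The sketch below takes the 92% and 65% figures above at face value and assumes, hypothetically, that both providers pay the same amount per available GPU-hour.

```python
def cost_per_utilized_hour(cost_per_available_hour: float, utilization: float) -> float:
    """Spread the cost of every available hour over the hours actually used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return cost_per_available_hour / utilization


HOURLY_COST = 1.00  # hypothetical, normalized cost per available GPU-hour

coreweave = cost_per_utilized_hour(HOURLY_COST, 0.92)
industry = cost_per_utilized_hour(HOURLY_COST, 0.65)
saving = 1 - coreweave / industry

print(f"Cost per utilized hour at 92%: {coreweave:.3f}")
print(f"Cost per utilized hour at 65%: {industry:.3f}")
print(f"Relative saving from utilization alone: {saving:.1%}")
```

Under these assumptions, higher utilization alone yields a cost per utilized hour about 29% lower, close to the bottom of the 30-50% price gap described above.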

CoreWeave's growth trajectory has been extraordinary:

2020: ~$15 million in revenue, primarily from cryptocurrency mining and early AI customers

2022: ~$200 million in revenue, following the pivot to AI infrastructure

2023: ~$700 million in revenue, driven by the generative AI boom

2024: ~$2.5 billion in projected revenue, with a $7 billion contracted backlog

On these estimates, revenue grew approximately 250% year-over-year from 2022 to 2023 and again from 2023 to 2024, one of the fastest growth rates in the infrastructure sector.
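The growth rate can be checked directly from the revenue estimates listed above. The sketch below computes annualized growth between each pair of listed years. Note that 2021 is missing from the list, so the first interval spans two years, and that these are the approximate estimates from this section rather than the $1.92 billion figure cited in the Thesis.

```python
# Approximate revenue in $ millions, as listed above
REVENUE_M = {2020: 15, 2022: 200, 2023: 700, 2024: 2500}


def annualized_growth(start_value: float, end_value: float, years: int) -> float:
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


listed_years = sorted(REVENUE_M)
for prev, curr in zip(listed_years, listed_years[1:]):
    rate = annualized_growth(REVENUE_M[prev], REVENUE_M[curr], curr - prev)
    print(f"{prev} -> {curr}: {rate:.0%} per year")
```

Each interval works out to roughly 250% or more per year, which means revenue more than tripled annually on these estimates.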

Major contracts include:

Microsoft: A multi-year, multi-billion dollar agreement to provide GPU capacity for Microsoft's AI initiatives, including ChatGPT and Copilot

Inflection AI: A $500+ million contract to provide H100 GPUs for training the Pi personal AI assistant

Anthropic: A significant agreement to support Claude AI training and inference

Stability AI: A major contract to power Stable Diffusion model development

Valuation

Our strategy, as always, is to allow emotions to settle and take a prudent approach by waiting one to two months before considering the purchase of even a single share of this company. This deliberate pause is essential; it enables us to let the initial excitement and hype surrounding the stock subside, providing us with a clearer perspective on its true market value. By doing so, we can closely observe how the stock behaves technically in the critical weeks following its release.

Although we find the underlying business model intriguing and full of potential, it is crucial to recognize that the current valuation appears significantly high. This elevated price point leaves us with limited room for maneuvering in terms of investment strategy. We must remain disciplined and avoid making hasty decisions driven by market sentiment. Instead, we should focus on gathering more data and insights that will responsibly inform our decision-making process, ensuring that our investments are grounded in solid analysis rather than fleeting emotions or trends.

CoreWeave's funding history provides context for that valuation:

2021: $50 million from Magnetar Capital at a $350 million valuation.

2022: $221 million Series A at a $2 billion valuation.

2023: $2.3 billion Series B at a $7 billion valuation.

2024: $2.5 billion Series C at a $19 billion valuation and $7.9 billion in debt facilities for data center expansion.

2025: Planned IPO at a potential valuation of up to $35 billion.
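Two simple ratios help frame why the valuation looks demanding. The sketch below computes the step-up between successive valuations in the list above and the multiple of 2024 revenue implied by a $35 billion valuation. It takes the valuations as stated, without distinguishing pre-money from post-money figures, and uses the $1.92 billion revenue number from the Thesis. Both are simplifying assumptions.

```python
# (year, valuation in $ billions), from the funding history above
VALUATIONS_B = [(2021, 0.35), (2022, 2.0), (2023, 7.0), (2024, 19.0), (2025, 35.0)]
REVENUE_2024_B = 1.92  # from the Thesis section


def step_up(previous: float, current: float) -> float:
    """How many times larger the current valuation is than the previous one."""
    return current / previous


def revenue_multiple(valuation: float, revenue: float) -> float:
    """Valuation expressed as a multiple of annual revenue."""
    return valuation / revenue


for (prev_year, prev_val), (year, val) in zip(VALUATIONS_B, VALUATIONS_B[1:]):
    print(f"{prev_year} -> {year}: {step_up(prev_val, val):.1f}x step-up")

multiple = revenue_multiple(VALUATIONS_B[-1][1], REVENUE_2024_B)
print(f"$35B valuation is about {multiple:.1f}x 2024 revenue")
```

The step-ups shrink with each round, while the potential IPO valuation still sits at roughly 18 times trailing revenue. That is the limited room for maneuver mentioned above.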

If you've made it this far in our CoreWeave exploration (and haven't yet started mining crypto in your basement or building a data center in your garage 😜⛏️), you might enjoy this final thought:

They say the California Gold Rush made more millionaires among those selling pickaxes than those mining gold. In today's AI gold rush, CoreWeave isn't just selling the pickaxes—they're building and maintaining the entire mining operation while everyone else frantically searches for their AI nuggets.

And if you're still wondering whether to invest your life savings in GPU farms, remember: the only thing that scales faster than CoreWeave's infrastructure is the number of AI startups claiming they'll revolutionize everything from toothbrushes to tax preparation. At least CoreWeave has the hardware to back up its claims!

In all seriousness, as AI continues its march into every aspect of our digital lives, companies like CoreWeave that build the fundamental infrastructure will remain critical, often unseen heroes of the AI revolution. Whether they become the AWS of AI computing or face new challenges in this rapidly evolving landscape remains to be seen—but their journey so far suggests they're well-positioned for whatever comes next.

After all, in the world of AI infrastructure, it's not just about having the most GPUs—it's about having the vision to see where computing needs to go before everyone else gets there.