Mikkel Frimer-Rasmussen

Improving Memory in Large Language Models - by Creating Titans?

Google’s Titan architecture combines short-term and long-term memory modules, so Titans can retain and use historical context that standard attention alone would lose.

Introduction: The field of large language models (LLMs) is evolving rapidly, with recent work pushing the boundaries of natural language processing, code generation, and more. One notable advance, Google’s Titan architecture, targets a persistent weakness of LLMs: limited memory. By combining short-term and long-term memory modules, Titans can retain and use context from much earlier in a sequence than attention alone allows. This article looks at the Titan architecture, its implications for the AI landscape, and its potential to transform industries.

Section 1: What Makes the Titan Architecture Unique? The Titan architecture is a new way to improve how large language models (LLMs) handle and remember information. Traditional models rely on a mechanism called "attention" to keep track of recent information within a limited context window (short-term memory). Titans add a separate long-term memory module that keeps learning while the model is in use. This long-term memory lets the model store and recall important details from much earlier in the input, much like how humans remember important events from the past.

Dynamic Memorization: Titans focus on surprising or unexpected details, much like how humans vividly remember shocking events. The more an input deviates from what the memory expects, the more strongly it is stored, so unusual or critical data gets priority.

Better Context Handling: Titans can process much longer pieces of text or data than a fixed attention window allows; the paper reports scaling to context windows of more than 2 million tokens.

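Variants for Flexibility: The Titan architecture comes in three variants, Memory as Context (MAC), Memory as Gate (MAG), and Memory as Layer (MAL), which differ in how the long-term memory is combined with attention. This lets researchers compare several ways of improving memory in AI models.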

In short, Titans make AI more intelligent and adaptable by letting it remember and use past information effectively.
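To make the idea of surprise-based memorization more concrete, here is a toy sketch in plain Python. It is not the implementation from the paper: the memory here is assumed to be a small linear map, "surprise" is assumed to be the squared error between what the memory recalls and what it should recall, and the names `recall`, `surprise`, `write`, and `demo`, along with the values of `lr`, `eta`, and `alpha`, are made up for illustration. The only point is that a bigger surprise leads to a bigger write, with some momentum from past surprise and a little forgetting.

```python
def recall(memory, key):
    """Read from the memory: a matrix-vector product memory @ key."""
    return [sum(w * k for w, k in zip(row, key)) for row in memory]


def surprise(memory, key, value):
    """Squared error between what the memory recalls for `key` and `value`."""
    return sum((r - v) ** 2 for r, v in zip(recall(memory, key), value))


def write(memory, momentum, key, value, lr=0.1, eta=0.5, alpha=0.01):
    """One memory update (hyperparameter values are illustrative assumptions).

    The gradient of the squared error with respect to memory[i][j] is
    2 * error[i] * key[j], so a larger surprise produces a larger write.
    `eta` carries over part of the previous update (momentum) and
    `alpha` slowly decays old content (forgetting).
    """
    error = [r - v for r, v in zip(recall(memory, key), value)]
    new_memory, new_momentum = [], []
    for i, row in enumerate(memory):
        s_row = [eta * momentum[i][j] - lr * 2.0 * error[i] * key[j]
                 for j in range(len(row))]
        m_row = [(1.0 - alpha) * w + s for w, s in zip(row, s_row)]
        new_momentum.append(s_row)
        new_memory.append(m_row)
    return new_memory, new_momentum


def demo(steps=50):
    """Show the same key/value pair repeatedly and track the surprise."""
    memory = [[0.0, 0.0], [0.0, 0.0]]
    momentum = [[0.0, 0.0], [0.0, 0.0]]
    key, value = [1.0, 0.0], [0.5, -0.5]
    before = surprise(memory, key, value)
    for _ in range(steps):
        memory, momentum = write(memory, momentum, key, value)
    after = surprise(memory, key, value)
    return before, after, memory


if __name__ == "__main__":
    before, after, _ = demo()
    print("surprise before:", before)
    print("surprise after: ", after)
```

Running `demo()` shows the surprise for a repeated input shrinking as the memory absorbs it, while anything stored under a different key is left alone. A real system would use a learned neural network as the memory rather than a 2x2 table of numbers.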

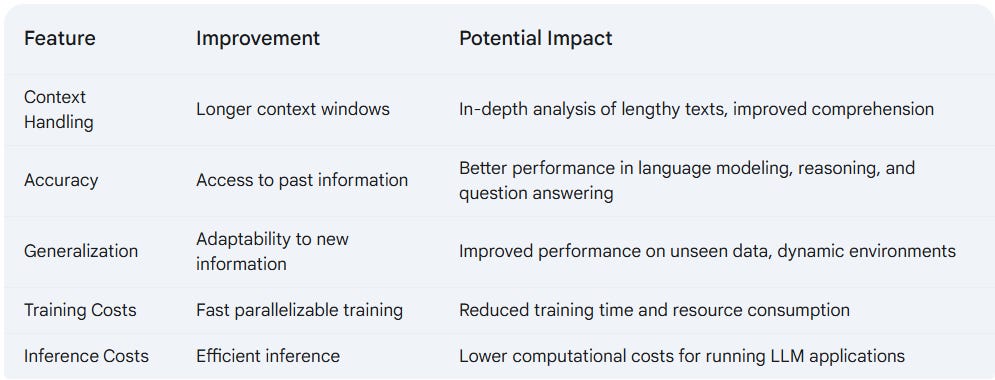

Section 2: Implications for Large Language Models The Titan architecture redefines what’s possible for LLMs by addressing key trade-offs:

Improved Accuracy: Access to both recent and historical data boosts performance in reasoning and question answering.

Generalization: Titans adapt to unseen data more effectively, making them suitable for dynamic environments.

Efficiency Gains: Fast, parallelizable training reduces development time and resource demands, while efficient inference lowers computational costs.

These advancements make Titans particularly valuable for applications requiring both speed and precision.
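One way to get a feel for the efficiency argument is a rough count of how many token pairs attention has to score. The sketch below is back-of-the-envelope arithmetic, not a benchmark of Titans: it compares full causal attention, where every token looks at all earlier tokens, with attention limited to a recent window. It assumes that a long-term memory module adds a per-token cost that does not grow with context length, which is why only the attention part is counted. The function names and the example sizes are illustrative assumptions.

```python
def full_attention_pairs(n_tokens):
    """Token pairs scored by causal full attention: 1 + 2 + ... + n."""
    return n_tokens * (n_tokens + 1) // 2


def windowed_pairs(n_tokens, window):
    """Pairs scored when each token sees at most the last `window` tokens,
    itself included."""
    return sum(min(i, window) for i in range(1, n_tokens + 1))


if __name__ == "__main__":
    n, w = 100_000, 1_000  # hypothetical context length and window size
    full = full_attention_pairs(n)
    local = windowed_pairs(n, w)
    print("full attention pairs:    ", full)
    print("windowed attention pairs:", local)
    print("ratio:", round(full / local, 1))
```

The full count grows with the square of the context length, while the windowed count grows roughly in a straight line. That gap is the room a long-term memory has to work with: it needs to preserve what the window drops without bringing the quadratic cost back.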

Section 3: Transformative Applications of Titans Titans’ capabilities unlock new opportunities across industries:

Customer Service: AI agents can remember past interactions, delivering personalized and efficient support.

Education: Titans enable adaptive learning systems that cater to individual student needs.

Healthcare: By analyzing extensive medical histories, Titans can support doctors in diagnosing and treating patients more effectively.

These examples showcase the broad potential of Titans to enhance user experiences and solve complex challenges.

Section 4: Business Impacts and Competitive Advantages The introduction of Titans could reshape the business landscape:

New Opportunities: Titans enable advanced applications in personalized services, complex data analysis, and more.

Cost-Effective AI: Lower training and inference costs make AI accessible to smaller enterprises.

Industry Leadership: Google, as the originator, and other early adopters could gain a competitive edge, pushing rivals to innovate in response.

For example, Google may integrate Titans into Gemini 2 for enhanced dialogues, while competitors like OpenAI could adopt similar strategies to stay competitive.

Section 5: Challenges and Considerations Despite their promise, Titans come with potential hurdles:

Scalability: Managing extremely long contexts remains a challenge.

Memory Complexity: Efficiently retrieving data from long-term memory modules could become cumbersome.

Unexpected Biases: The surprise-based memorization mechanism might introduce unanticipated behaviors in certain scenarios.

Addressing these challenges will be key to maximizing Titans’ impact.

Original paper on ResearchGate: Titans: Learning to Memorize at Test Time

Conclusion: Titans have real potential to reshape industries and extend what AI systems can do, provided the challenges above are addressed.

As the AI field continues to evolve, Titans’ ability to learn and memorize dynamically marks a significant step toward creating intelligent systems that interact with the world in increasingly human-like ways.

