Tester, Architect & PM walked into a codebase: How to make vibe coding work

From chaotic prompting to systematic collaboration: a better way to work with AI assistants

Note: I don't particularly like the term "vibe coding", but now that it's in general use, I've reluctantly adopted it. At its core, this approach is simply about reaching alignment with AI agents, confirming that they understand the task, and only then implementing.

How I Developed This Approach

Key Principle: "An AI assistant is neither omniscient nor deficient—it simply reflects the quality and clarity of the context you provide it."

After countless hours working with AI coding assistants, I kept hitting the same frustrations. The AI would misunderstand requirements, forget context, or produce inconsistent code.

I needed a better way to collaborate with these tools. Through trial and error, I developed this approach based on a simple insight: AI assistants perform only as well as the context you provide them. They're not magical oracles; they're more like junior developers who need clear guidance and structure.

The Setup Phase: Building Your Foundation

Key Principle: "Invest time in setting clear standards and creating comprehensive documentation upfront—it's the foundation that makes every subsequent interaction with your AI assistant more effective."

I start by establishing a solid foundation. This is a one-time investment that pays dividends throughout the project.

Project Repository Configuration

First, I set up the technical infrastructure:

A GitHub repository with branch protection rules

PR templates aligned with quality standards

CI/CD pipelines for automated testing

Static type-checking configuration (TypeScript, mypy, etc.)

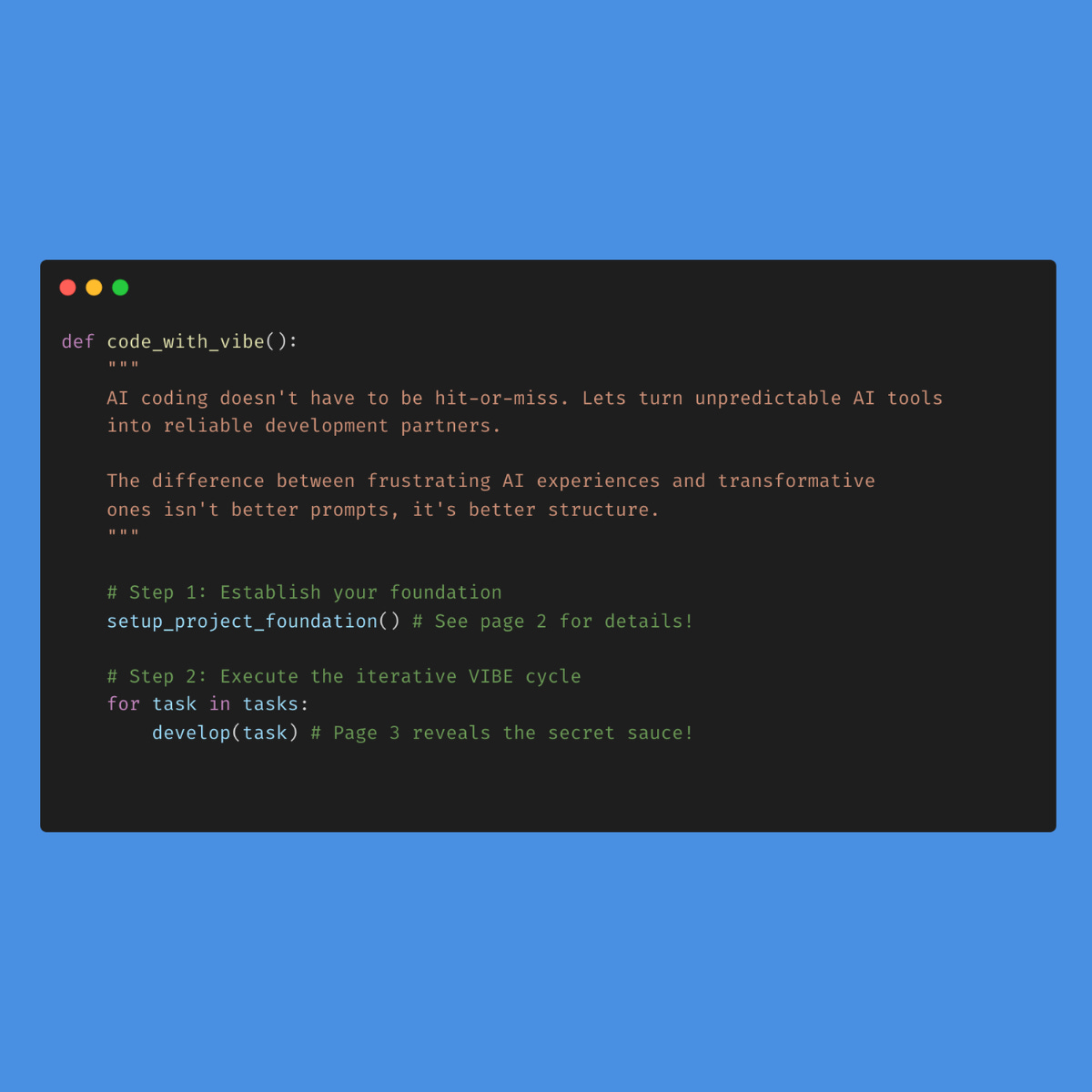

The Four Guidance Documents

The heart of this approach is creating four comprehensive documents:

Project Overview

High-level description of what we're building and why

Business objectives and core functionality

High-level description of the main subsystems and components

Technical Architecture (as an architect)

Coding standards and style guidelines

Design principles (SOLID, YAGNI, DRY)

Implementation patterns to follow

Technology stack with specific version requirements

Design Philosophy

Testing Guidelines (as a tester)

Test strategy and coverage requirements

Unit test structure and patterns

Edge cases to consider and handle

Integration testing approach

Product Management (as a product manager)

Prioritization guidelines

Explanation of the iterative approach

Risk management strategy

Strategy for breaking the project into atomic, achievable milestones, each of which leaves the project in a working state

These guidance documents are configured as automated context in my AI tools: in Cursor, add them to .cursor/rules; in Windsurf, add them to .windsurfrules. That way they're automatically included in every session, and your standards are applied consistently across all AI interactions without manual copy-pasting.

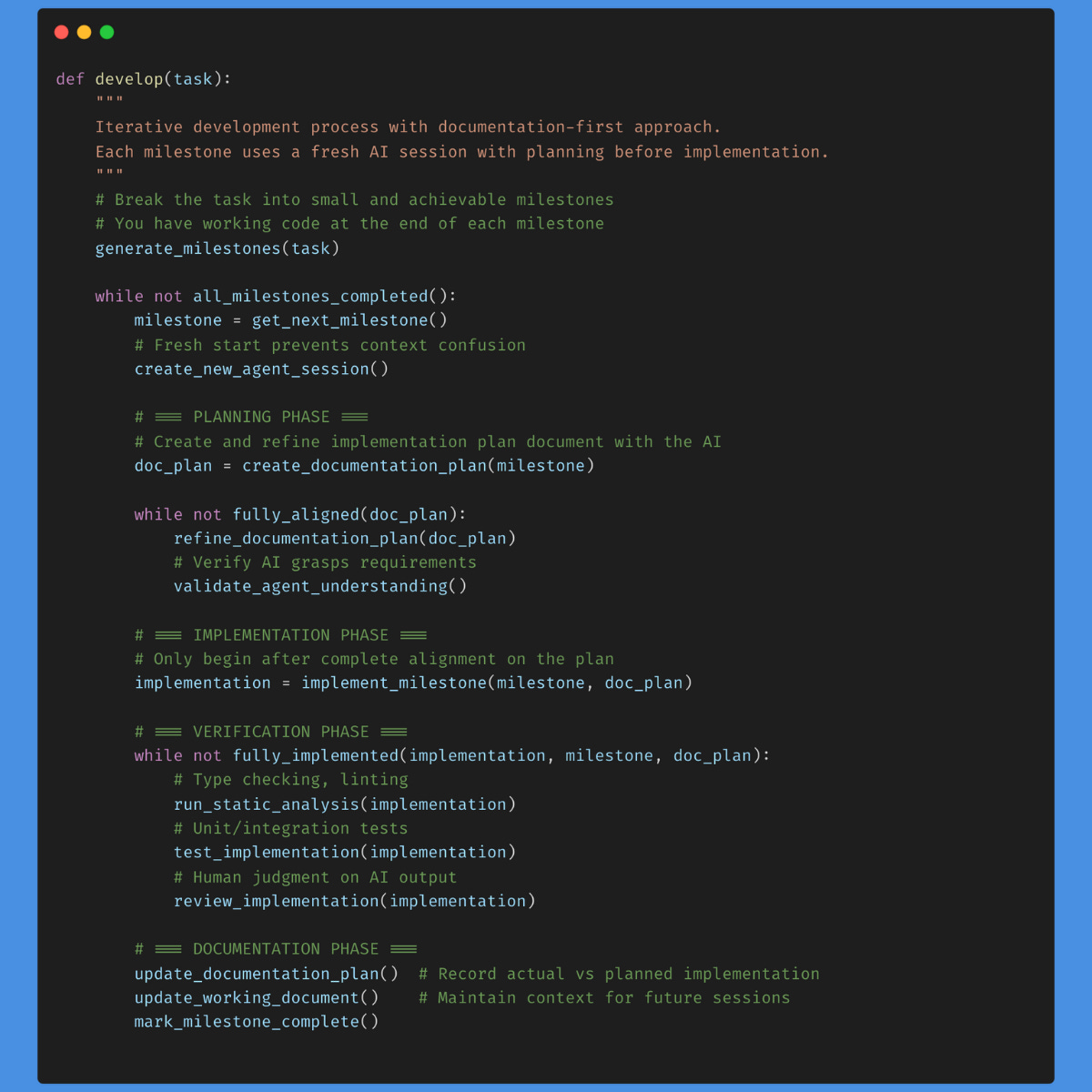

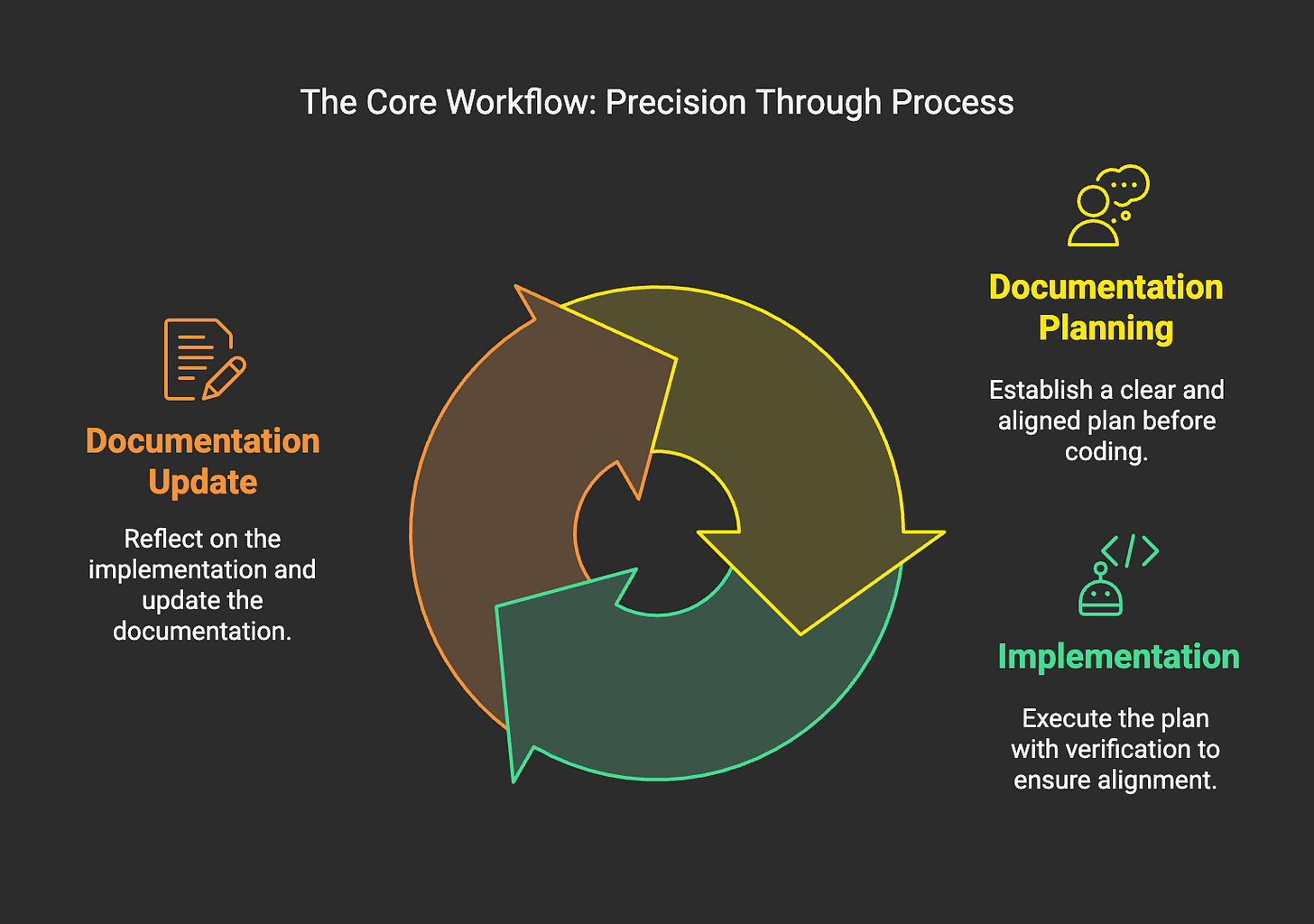
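When the rules live in a single file, as with .windsurfrules in Windsurf, one option is to keep the four documents as separate sources and regenerate the combined file whenever one of them changes. Below is a minimal sketch of that idea in Python; the `docs/guidance/` directory, the four file names, and the helper functions are all hypothetical, so adjust them to your own repository.

```python
from pathlib import Path

# Hypothetical file names for the four guidance documents, in the order
# they should appear in the combined rules file.
GUIDANCE_FILES = [
    "project-overview.md",
    "technical-architecture.md",
    "testing-guidelines.md",
    "product-management.md",
]


def combine_guidance(docs: dict[str, str]) -> str:
    """Join the guidance documents in a fixed order, labelling each with its source."""
    missing = [name for name in GUIDANCE_FILES if name not in docs]
    if missing:
        raise ValueError(f"missing guidance documents: {missing}")
    # The source comment lets you (and the assistant) trace a rule back to its document.
    sections = [f"<!-- source: {name} -->\n{docs[name].strip()}\n" for name in GUIDANCE_FILES]
    return "\n".join(sections)


def write_rules_file(source_dir: Path, target: Path) -> None:
    """Read whichever guidance documents exist in source_dir and write the combined file."""
    docs = {
        name: (source_dir / name).read_text(encoding="utf-8")
        for name in GUIDANCE_FILES
        if (source_dir / name).exists()
    }
    # combine_guidance raises if any document is missing, so a partial file is never written.
    target.write_text(combine_guidance(docs), encoding="utf-8")
```

For example, `write_rules_file(Path("docs/guidance"), Path(".windsurfrules"))` could run as a pre-commit hook or CI step so the combined file never drifts from its sources.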

The Development Cycle: Plan, Implement, Document

Key Principle: "Never write a line of code until you've achieved complete alignment on what you're building—misunderstandings corrected during planning cost minutes, while those caught during implementation cost hours."

For each task, I follow a consistent process:

Phase 1: Documentation Planning

Key Principle: "The documentation plan is your shared mental model with the AI—refine it until there's zero ambiguity, then use it as a portable context across tools and sessions."

Before writing any code, I work with the AI to create a detailed implementation plan document. This serves multiple purposes:

Clarifies requirements - The back-and-forth forces me to be precise

Verifies AI understanding - I can see if the AI has misunderstood anything

Creates portable context - I can move this between different AI tools or sessions

Manages context window limitations - Distills essential information into a compact form

I iterate on this plan until I'm 100% aligned with the AI's understanding. Sometimes, this takes several revisions, and that's fine—it's much cheaper to fix misunderstandings here than after the code is written.
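One way to make "100% aligned" concrete is to give the plan an explicit list of open questions and to treat it as ready for implementation only when that list is empty. Below is a minimal sketch in Python; the `ImplementationPlan` class and its fields are a hypothetical template, not a fixed format, and `to_markdown()` simply renders the plan as portable text you can paste into another tool or session.

```python
from dataclasses import dataclass, field


@dataclass
class ImplementationPlan:
    """Hypothetical template for a per-milestone documentation plan."""
    milestone: str
    goal: str
    steps: list[str] = field(default_factory=list)
    acceptance_criteria: list[str] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def is_aligned(self) -> bool:
        # Ready to implement only when goal, steps and criteria are filled in
        # and no open questions remain.
        has_content = bool(self.goal and self.steps and self.acceptance_criteria)
        return has_content and not self.open_questions

    def to_markdown(self) -> str:
        """Render the plan as compact Markdown for another tool or session."""
        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items) or "- (none)"

        return (
            f"# Plan: {self.milestone}\n\n"
            f"## Goal\n{self.goal}\n\n"
            f"## Steps\n{bullets(self.steps)}\n\n"
            f"## Acceptance criteria\n{bullets(self.acceptance_criteria)}\n\n"
            f"## Open questions\n{bullets(self.open_questions)}\n"
        )
```

Each round of back-and-forth with the assistant either resolves a question (remove it from the list) or surfaces a new one (add it), which keeps the revisions focused on what is still ambiguous.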

Why This Planning Phase Is Worth It

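The documentation planning phase might seem tedious, but it addresses three core limitations of current AI tools:

Context window limitations - AI assistants can only hold a limited amount of text in their context window, so details from earlier in a long session can be dropped or overlooked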

Tool-switching - Moving between Cursor, ChatGPT, Claude, etc., loses context

Misalignment - AI might think it understands requirements when it doesn't

Phase 2: Implementation

Key Principle: "With thorough planning complete, implementation becomes verification rather than exploration—your guardrails of static typing and automated tests ensure the code matches your intent."

Only after we're fully aligned does the AI write actual code. Because of our thorough planning, this phase typically goes smoothly:

The AI implements based on our agreed plan

Static type checking catches many potential issues

Tests verify functionality against requirements

I review the implementation, but most issues have already been caught during planning
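Type hints and tests are what turn "matches the plan" into something checkable. Below is a minimal sketch in Python, assuming pytest-style `test_` functions; with pytest you would normally use `pytest.raises`, but plain asserts keep the example dependency-free. The `parse_quantity` function is hypothetical: its type hints let a static checker such as mypy flag a call like `parse_quantity(42)` before any test runs, and each test maps to one requirement or edge case that would be listed in the plan and the testing guidelines.

```python
# Hypothetical function under test: parses a non-negative integer quantity.
def parse_quantity(text: str) -> int:
    """Parse a non-negative integer, ignoring surrounding whitespace."""
    stripped = text.strip()
    if not stripped:
        raise ValueError("quantity is empty")
    # isdecimal() rejects signs, decimal points and words such as "ten".
    if not stripped.isdecimal():
        raise ValueError(f"not a non-negative integer: {text!r}")
    return int(stripped)


def test_parses_plain_number() -> None:
    # Arrange
    raw = "42"
    # Act
    result = parse_quantity(raw)
    # Assert
    assert result == 42


def test_strips_whitespace() -> None:
    assert parse_quantity("  7\n") == 7


def test_rejects_edge_cases() -> None:
    # Edge cases the testing guidelines ask us to cover explicitly.
    for bad in ["", "   ", "-3", "4.5", "ten"]:
        try:
            parse_quantity(bad)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {bad!r}")


if __name__ == "__main__":
    test_parses_plain_number()
    test_strips_whitespace()
    test_rejects_edge_cases()
    print("all tests passed")
```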

Phase 3: Documentation Update

Key Principle: "Documentation isn't just a record of what you built, but the evolving context that guides what you'll build next—maintain it with the same care you'd maintain production code."

After implementation, I ask the AI to update our documentation:

Record what we actually built versus what we planned

Note any deviations and why they occurred

Update the working document for future milestones
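Recording "what we built versus what we planned" is easier when both sides are lists you can compare. Below is a minimal sketch in Python, assuming plan steps and delivered items are tracked as short text labels (a hypothetical convention); the `deviation_report` helper only finds the differences, and each reason is left as a TODO placeholder for you or the AI to fill in.

```python
def deviation_report(planned: list[str], built: list[str]) -> str:
    """Summarise differences between planned and delivered items as Markdown."""
    planned_set = set(planned)
    built_set = set(built)
    # Keep each list's original order so the report reads like the plan.
    dropped = [item for item in planned if item not in built_set]
    added = [item for item in built if item not in planned_set]

    lines = ["## Planned vs. built"]
    if not dropped and not added:
        lines.append("Built as planned; no deviations.")
    for item in dropped:
        lines.append(f"- Not built: {item} (reason: TODO)")
    for item in added:
        lines.append(f"- Added: {item} (reason: TODO)")
    return "\n".join(lines)
```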

Real-World Results

Key Principle: "Success isn't measured by how intelligent your AI seems, but by how consistently it produces quality code that requires minimal rework—predictability trumps brilliance."

After adopting this approach, I've seen dramatic improvements:

Code quality is consistently higher

Iterations are more predictable and quicker

Context switching between tools doesn't derail progress

The AI rarely misunderstands requirements

I spend less time fixing and more time building

The Philosophy Behind This Approach

Key Principle: "Treat AI as a junior engineer that needs context and guidance, not as a magical oracle that should read your mind—the best AI collaborations are structured conversations, not one-off prompts."

The key insight is treating AI as a collaborator, not a magic solution generator. You get much better results by providing clear context, guidelines, and feedback. I've found that most "AI failures" are actually failures of context or instruction.

The AI performs remarkably well when I'm explicit about requirements and design principles. This approach takes the best of human software engineering practices—clear requirements, consistent architecture, test-driven development—and adapts them to the AI-assisted development workflow.

Don’t just take my word for it; try it out yourself!

Key Principle: "Begin with thorough foundation-setting rather than rushing to implement—the time invested in clear documentation and project structure will be returned tenfold through smoother development cycles."

If you want to try this approach:

Start by creating your four guidance documents

Break your project into small, focused milestones

For each milestone, create a documentation plan before any code

Iterate until you're fully aligned with the AI

Only then implement, test, and document

The initial setup takes time, but the efficiency gains in development make it worthwhile. You'll find yourself spending less time fixing AI misunderstandings and more time making actual progress.

Remember: the AI is only as good as the context you provide. Give it the right foundation, and it becomes an invaluable partner in your development process.